Proj-2013-2014-BrasRobotique-1/SRS


Latest revision as of 06:53, 19 April 2014

The document provides a Software Requirements Specification (SRS) template. It is inspired by the IEEE/ANSI 830-1998 standard.

| Version | Date | Authors | Description | Validator | Validation Date |
|---|---|---|---|---|---|
| 0.1.0 | February 2014 | El Hadji Malick Fall, Adji Ndèye Ndaté Sambe | First description | El Hadji Malick Fall, Adji Ndèye Ndaté Sambe | February 15th, 2014 |
| 0.1.1 | April 2014 | El Hadji Malick Fall | Second description | Adji Ndèye Ndaté Sambe | April 5th, 2014 |
| 1.0.1 | April 2014 | Adji Ndèye Ndaté Sambe | Third description | El Hadji Malick Fall | April 6th, 2014 |

1. Introduction

1.1 Purpose of the requirements document

The main goals of this document, which describes the robotic arm project, are:

- allow people to discover the technology we will develop

- explain the approach adopted and the solutions we have proposed

- provide a working basis for future improvements

1.2 Scope of the product

The long-term goal of this project is to develop control software for a manipulator arm that assists people with disabilities. Commercial robotic arms exist, but they are unfortunately too expensive and lack a high-level control system. One example is the Jaco robotic arm.

1.3 Definitions, acronyms and abbreviations

ArUco

- It is a library for augmented reality applications, based on OpenCV.

Marker

- The library relies on coded markers. Each marker has a unique code given by the pattern of black and white cells inside it. The library detects borders and analyzes the resulting rectangular regions to find those likely to be markers. Each candidate is then decoded, and if the code is valid, the rectangle is considered a marker.

OpenCV

- OpenCV (Open Source Computer Vision Library) is a free library mainly designed for real-time image processing.

SPADE

- SPADE (Smart Python multi-Agent Development Environment) is a multi-agent and organizations platform based on the XMPP technology and written in the Python programming language. The SPADE Agent Library is a Python module for building SPADE agents. XMPP is the Extensible Messaging and Presence Protocol, used for instant messaging, presence, multi-party chat, voice and video calls, etc.
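The decoding step from the Marker definition above can be illustrated with a short Python sketch. It assumes the cells inside a candidate rectangle have already been read into a square grid of bits, and it uses a small hypothetical dictionary: `DICTIONARY`, `rotate` and `decode` are illustrative names, not ArUco's API or its real marker set. Because the camera can see a marker in any orientation, the candidate is compared with every known code in all four rotations; exact matching is a simplification.

```python
from typing import Dict, Optional, Tuple

# A marker's inner code as a square grid of bits (1 and 0 stand for the two cell colours).
Grid = Tuple[Tuple[int, ...], ...]

# Hypothetical dictionary for illustration only; it is NOT ArUco's real marker set.
DICTIONARY: Dict[int, Grid] = {
    7: ((1, 0, 0, 0),
        (1, 1, 0, 0),
        (0, 1, 1, 0),
        (0, 0, 1, 1)),
}


def rotate(grid: Grid) -> Grid:
    """Rotate a square bit grid 90 degrees clockwise."""
    n = len(grid)
    return tuple(tuple(grid[n - 1 - c][r] for c in range(n)) for r in range(n))


def decode(candidate: Grid, dictionary: Dict[int, Grid]) -> Optional[int]:
    """Return the id whose code matches the candidate in one of its four
    orientations, or None if the rectangle is not a valid marker."""
    grid = candidate
    for _ in range(4):
        for marker_id, code in dictionary.items():
            if grid == code:
                return marker_id
        grid = rotate(grid)
    return None
```

For example, `decode(rotate(DICTIONARY[7]), DICTIONARY)` returns `7`, while an all-black grid returns `None` and would be rejected.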

1.4 References

General:

This is a school project at Polytech Grenoble, supported by AIR. The project page on the AIR wiki is available through this link [1].

Technical:

2011-2012 project page on the AIR wiki: http://air.imag.fr/index.php/Proj-2011-2012-BrasRobotiqueHandicap

Libraries:

ArUco - http://www.uco.es/investiga/grupos/ava/node/26

OpenCV - http://opencv.org/

SPADE - https://github.com/javipalanca/spade

1.5 Overview of the remainder of the document

The remainder of this document presents the technical characteristics of the project, such as requirements, constraints and user characteristics (Section 2). Section 3 details the specific functional, performance, system and other related requirements of the project. Section 4 lists possible product evolutions, and Section 5 provides appendices with supporting information.

2. General description

The long-term goal of this project is to develop control software for a manipulator arm that supports people with disabilities. Commercial robotic arms exist, but they are unfortunately too expensive and lack a high-level control system. The aim is therefore to develop a robotic arm able to perform a series of movements using markers. The part described in this document focuses on the detection of markers.

2.1 Product perspective

The design has to take into account the possible evolutions of this project, listed in Section 4.

2.2 Product functions

Marker detection

First, using a webcam placed on the robotic arm, it should be possible, in real time, to:

- Detect a predefined type of marker placed on an object

- Calculate its coordinates

- Return the marker position

- Save the coordinates of the marker in an XML file
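The document does not say how the marker position is derived from the detected corners. A minimal sketch, assuming the position is taken as the centre (mean) of the four corner points in image pixels; `marker_center` is a hypothetical helper, not part of ArUco:

```python
from typing import List, Tuple

Point = Tuple[float, float]


def marker_center(corners: List[Point]) -> Point:
    """Return the marker position as the mean of its four corners (image pixels)."""
    if len(corners) != 4:
        raise ValueError("a marker has exactly four corners")
    x = sum(px for px, _ in corners) / 4.0
    y = sum(py for _, py in corners) / 4.0
    return (x, y)
```

For a square marker with corners `(100, 100)`, `(200, 100)`, `(200, 200)` and `(100, 200)`, the function returns `(150.0, 150.0)`.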

Data transmission

Second, the obtained data must be sent to the robot so that it can move to the object. This requires two steps:

- Connect to the robot using the client / server model based on the system of agents

- Send the XML file which contains the position of the marker

Simulation

The simulator has to use this information to make the robot move in the right direction.
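One possible way for a simulator to move the robot "in the right direction" is to advance the robot point by a fixed distance along the straight line to the marker at each update. This is a sketch under that assumption: `step_towards` and the step size are hypothetical, not taken from the project's simulator.

```python
import math
from typing import Tuple

Point = Tuple[float, float]


def step_towards(robot: Point, target: Point, step: float) -> Point:
    """Move the robot point by at most `step` units along the straight line to the target."""
    dx, dy = target[0] - robot[0], target[1] - robot[1]
    distance = math.hypot(dx, dy)
    if distance <= step:
        return target  # close enough: land exactly on the target
    return (robot[0] + step * dx / distance, robot[1] + step * dy / distance)
```

Starting at `(0, 0)` with target `(3, 4)` and a step of `1.0`, the first call moves the point to about `(0.6, 0.8)`; repeated calls end exactly on the target because the final step snaps onto it.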

2.3 User characteristics

This project is intended for people with disabilities. The robotic arm will allow them to grab distant objects. Since the arm can be remote-controlled, users can also be developers who create new sequences of instructions for the robot.

2.4 General constraints

- Unlike existing technologies, our robotic arm must be able to detect an object carrying a marker and retrieve it.

- It must also be able to interpret a series of instructions given to it.

- The data format is imposed: XML.

- The format of the file that records the detection result must not be changed, since changing it could affect the detection of the object's position when the object is moved.

- The marker detection is written in C++

- The system of agents is written in Python

- The simulator is written in Python

2.5 Assumptions and dependencies

The system relies on ArUco, which lets the program retrieve the position of the marker, and on SPADE, which makes data transmission through an XML file possible.

3. Specific requirements, covering functional, non-functional and interface requirements

- document external interfaces,

- describe system functionality and performance

- specify logical database requirements,

- design constraints,

- emergent system properties and quality characteristics.

3.1 Requirement X.Y.Z (in Structured Natural Language)

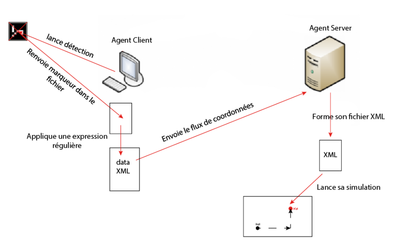

This diagram below describes how our project works.

First, the Client Agent launches detection with ArUco and obtains a marker vector. It then applies a regular expression to extract the coordinates from the marker vector and turns them into XML tags. These tags are transmitted one after another to the Server Agent, which receives them and assembles the file it needs to start the simulation.

3.1.1 Marker detection

Description: The camera detects the marker on the object and locates it.

Inputs: Video stream and markers

Source: ArUco library detection

Outputs: The video stream with the detected markers highlighted along with their ids, and a file containing one of the detected marker vectors (its id and the coordinates of its four corner points)

Destination: All people, and all objects that can be grabbed by the robot

Action: The ArUco library detects borders and analyzes the rectangular regions that may be markers. When a marker is found, its vector is written into a file.

Non functional requirements: Marker detection should be performed in real time, as fast as possible.

Pre-condition: A camera is available and the ArUco library is installed.

Post-condition: The marker has been correctly recognized.

Side-effects: Possible failure of marker recognition

3.1.2 Coordinate transfer

Description: Coordinates are extracted from the marker vector and transferred as XML tags.

Inputs: The file containing the marker vector

Source: SPADE agents (client and server) and a platform connection

Outputs: An XML file

Destination: The Server Agent

Action: The Client Agent applies a regular expression to extract the coordinates from the file and sends them to the Server Agent as XML tags.
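The extraction and tag-building steps can be sketched in Python as follows. The input line format (`<id>=(x1,y1) (x2,y2) (x3,y3) (x4,y4) ...`) is an assumption about how the marker vector is written to the file, and the `<point>` tag with its `marker`, `index`, `x` and `y` attributes is a hypothetical layout, not the project's actual schema. Each corner becomes one tag so that the tags can be sent as separate messages.

```python
import re
import xml.etree.ElementTree as ET
from typing import List, Tuple

# Assumed (hypothetical) line format: "<id>=(x1,y1) (x2,y2) (x3,y3) (x4,y4) ..."
ID_RE = re.compile(r"^\s*(\d+)=")
POINT_RE = re.compile(r"\(\s*(-?\d+(?:\.\d+)?)\s*,\s*(-?\d+(?:\.\d+)?)\s*\)")


def parse_marker_line(line: str) -> Tuple[int, List[Tuple[float, float]]]:
    """Extract the marker id and its four corner coordinates from one line."""
    id_match = ID_RE.match(line)
    points = [(float(x), float(y)) for x, y in POINT_RE.findall(line)]
    if id_match is None or len(points) < 4:
        raise ValueError("not a marker vector: %r" % line)
    return int(id_match.group(1)), points[:4]


def to_xml_tags(marker_id: int, points: List[Tuple[float, float]]) -> List[str]:
    """Turn each corner into one XML tag, ready to be sent as a separate message."""
    tags = []
    for index, (x, y) in enumerate(points):
        elem = ET.Element("point", {"marker": str(marker_id), "index": str(index),
                                    "x": str(x), "y": str(y)})
        tags.append(ET.tostring(elem, encoding="unicode"))
    return tags
```

For the line `23=(213.5,120.1) (300,118) (302,205) (210,207)`, `parse_marker_line` returns the id `23` and four corner tuples, and `to_xml_tags` produces four `<point .../>` strings.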

Non functional requirements: The messages have to be delivered quickly and arrive in order. A message must not be lost.

Pre-condition: Download, configure and launch the SPADE platform (on localhost or on a specific IP address).

Post-condition: All messages have reached their destination in order.

Side-effects: The server sends acknowledgments.
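The ordering, no-loss and acknowledgment requirements can be met with sequence numbers, independently of the transport. The sketch below does not use SPADE's API: the `(sequence, total, tag)` message tuple, `number_messages`, `Reassembler` and the `<marker>` root element are hypothetical. The server acknowledges every message it stores, buffers messages that arrive out of order, and only produces the XML file once nothing is missing; the client would resend any message whose acknowledgment never arrives.

```python
from typing import Dict, List, Optional, Tuple

# Hypothetical message format: (sequence number, total number of messages, XML tag)
Message = Tuple[int, int, str]


def number_messages(tags: List[str]) -> List[Message]:
    """Client side: attach a sequence number and the total count to every tag."""
    return [(seq, len(tags), tag) for seq, tag in enumerate(tags)]


class Reassembler:
    """Server side: acknowledge each message and rebuild the file in order."""

    def __init__(self) -> None:
        self.received: Dict[int, str] = {}
        self.total: Optional[int] = None

    def receive(self, message: Message) -> int:
        """Store a message (a resent duplicate simply overwrites) and return its ack."""
        seq, total, tag = message
        self.total = total
        self.received[seq] = tag
        return seq

    def missing(self) -> List[int]:
        """Sequence numbers not received yet (empty until the total is known)."""
        if self.total is None:
            return []
        return [s for s in range(self.total) if s not in self.received]

    def document(self) -> Optional[str]:
        """Return the assembled XML once every message has arrived, else None."""
        if self.total is None or self.missing():
            return None
        body = "".join(self.received[s] for s in range(self.total))
        return '<?xml version="1.0"?>\n<marker>' + body + "</marker>"
```

In this design the order of arrival does not matter: the file is rebuilt from the sequence numbers, and a lost message shows up in `missing()` instead of silently corrupting the file.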

3.1.3 Graphic Simulation

Description: The XML file is parsed, then the marker position is calculated and displayed on a graphical interface.

Inputs: An XML file

Source: Python xml.dom and Tkinter libraries

Outputs: A graphical interface

Destination: The robot and the people who use it (such as the other group)

Action: The Server Agent interprets the XML tags, retrieves the coordinates of the corresponding point and displays it. The point representing the robot is also displayed and can be moved with the keyboard.

Non functional requirements: The version of the XML file must be 1.0.
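Reading the received file and enforcing the XML 1.0 requirement can be sketched with `xml.dom.minidom` from the Python standard library. The `<point x="..." y="..."/>` layout is a hypothetical schema and `read_points` is an illustrative name; drawing the points with Tkinter is left out.

```python
import re
from typing import List, Tuple
from xml.dom import minidom

# Matches the XML declaration at the very start of the document and captures its version.
DECLARATION_RE = re.compile(r"""^<\?xml\s+version\s*=\s*["']([^"']+)["']""")


def read_points(xml_text: str) -> List[Tuple[float, float]]:
    """Check that the document declares XML 1.0, then return (x, y) for every <point>."""
    match = DECLARATION_RE.match(xml_text)
    if match is None or match.group(1) != "1.0":
        raise ValueError("the XML file must declare version 1.0")
    document = minidom.parseString(xml_text)
    return [(float(p.getAttribute("x")), float(p.getAttribute("y")))
            for p in document.getElementsByTagName("point")]
```

A malformed document makes `minidom.parseString` raise an exception, which matches the pre-condition that the file must be readable and error-free.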

Pre-condition: The XML file is readable and contains no errors.

Post-condition: The point is displayed on the screen at the exact coordinates given as parameters.

Side-effects: A pop-up window appears when the robot reaches the marker's position.
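The keyboard control and the "marker reached" check can be kept as plain functions, separate from the Tkinter display, which makes them easy to test. In this sketch the arrow-key names are Tk keysyms (`Up`, `Down`, `Left`, `Right`), while the step size, the pixel tolerance and the names `move` and `reached` are hypothetical choices, not values from the project.

```python
import math
from typing import Dict, Tuple

Point = Tuple[float, float]

# Screen coordinates: y grows downwards, so "Up" decreases y.
MOVES: Dict[str, Tuple[int, int]] = {"Up": (0, -1), "Down": (0, 1), "Left": (-1, 0), "Right": (1, 0)}


def move(robot: Point, key: str, step: float = 5.0) -> Point:
    """Return the robot position after one arrow-key press (other keys are ignored)."""
    dx, dy = MOVES.get(key, (0, 0))
    return (robot[0] + dx * step, robot[1] + dy * step)


def reached(robot: Point, marker: Point, tolerance: float = 5.0) -> bool:
    """True when the robot is within `tolerance` pixels of the marker position."""
    return math.hypot(marker[0] - robot[0], marker[1] - robot[1]) <= tolerance
```

In a Tkinter window, a `<Key>` binding could pass `event.keysym` to `move`, redraw the robot point, and call `tkinter.messagebox.showinfo` once `reached` returns `True`.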

4. Product evolution

- Remote control

- Use several types of markers

- Send coordinates in real time to an interface

- Give the robot the types of markers to detect as parameters

- Automatic recalibration of the position of the robot if the detected marker position is not optimal

5. Appendices

5.1 Specification

- The global project's page can be found here

- Another RICM4 group is working on this project. Their wiki page can be found there: