Projets-2016-2017-VideoConference

Alice.Rivoal (talk | contribs) (→Week4) |

(→Week12) |

||

| (15 intermediate revisions by the same user not shown) | |||

| Line 11: | Line 11: | ||

= General constraints:= |

= General constraints:= |

||

| − | In order to work correctly, this software |

+ | In order to work correctly, this software has to be run with google Chrome or android because of the technologies that it uses such as webRTC that is not compatible with any other technologies aside from these two. |

=week1:= |

=week1:= |

||

| Line 43: | Line 43: | ||

=Week5= |

=Week5= |

||

added a code that allows the program to run on our mobile phone. |

added a code that allows the program to run on our mobile phone. |

||

| − | * We added a file in cs folder so that all we have to do to run the program on our phone was to type : 192.168.43.253:5000/server.html |

+ | * We added a file in cs folder so that all we have to do to run the program on our phone was to type : 192.168.43.253:5000/server.html and the html program runs correctly. |

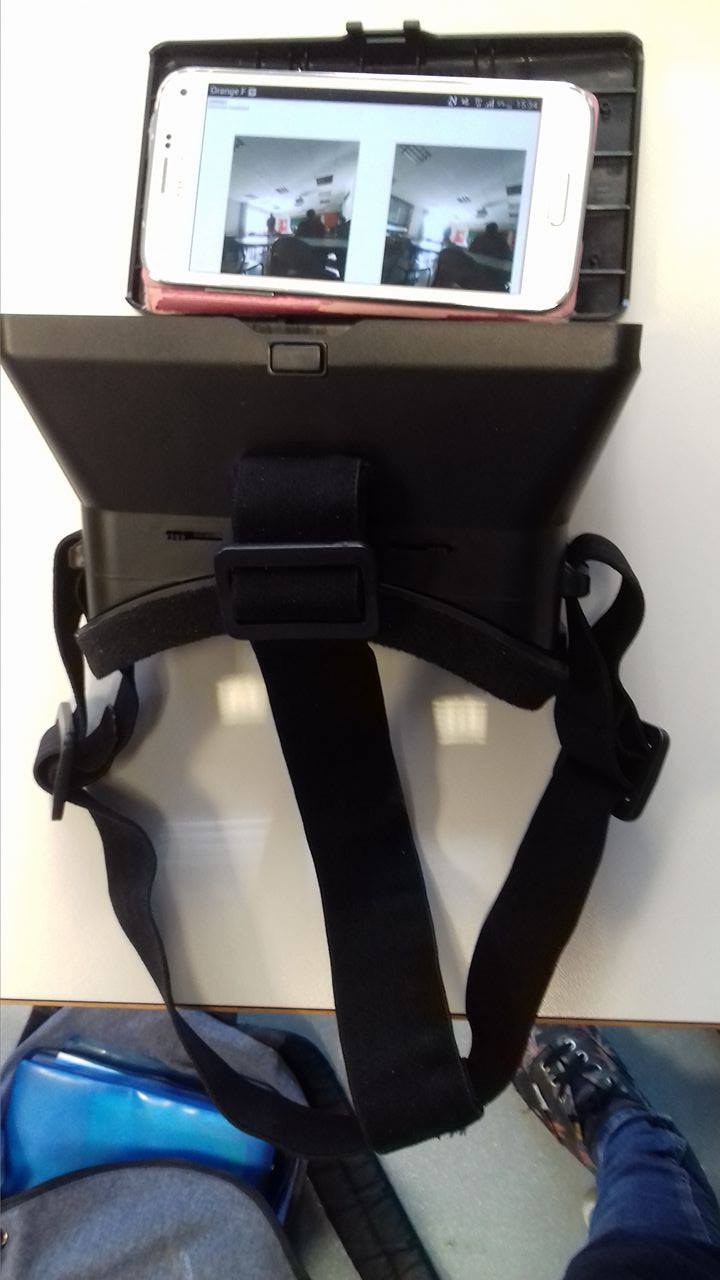

[[ File:17121626 1404030216341039 1967222825 o.jpg ]] |

[[ File:17121626 1404030216341039 1967222825 o.jpg ]] |

||

=Week6= |

=Week6= |

||

| − | tried to simplify our architecture and use only one computer to run the client and server on the same |

+ | tried to simplify our architecture and use only one computer to run the client and server on the same device. |

| − | We |

+ | We soon realised that with http we won't be able to achieve our goal because of security matters => |

searching how to convert http to https. |

searching how to convert http to https. |

||

=Week7= |

=Week7= |

||

| + | <holidays> |

||

| ⚫ | |||

| + | =Week8= |

||

| ⚫ | |||

| + | |||

| + | =Week9= |

||

| + | * Once again we went to fablab and got a code to generate security certificate. |

||

| + | * to secure the connection between the diferent actors of the project we first created ssl folder in css and uploaded two files that we run one after another. |

||

| + | *the first one generated a certificate that had to be downloaded by each other device involved in the connection(the phone). |

||

| + | *for the computer (that is also a server). We added this certificate to chrome by following these steps:Parametres -> Afficher parametres avancés ->in HTTPS (gerer les certificats) |

||

| + | *we also modified the httpserver (express) so that it would use https instead of http so that the whole code would be compatible. |

||

| + | *For this part because most of the methods and functions used in our project were not available anymore we had to update the code. and replace some of the methods with more recent ones. |

||

| + | *Finally we fixed our material. |

||

| + | |||

| + | =Week10= |

||

| + | *Implemented the part in which we collect the phone's motion data. (this part runs perfectly). to test it at this stage of the program we used alerts instead of the console.log because the data remains in the phone. |

||

| + | *still working on the code's upgrade |

||

| + | *still need to send the phone's motion information back to the server to pilot our cameras. |

||

| + | =Week11= |

||

| + | *We started to code an http server to record the phone's position on the computer. But we realised very soon that with the certificate that we made at first ( when we switched from http to https) this method won't work. |

||

| + | *Instead of using HTTP we decided to use udp(using:https://developer.chrome.com/apps/socket#method-read"). |

||

| + | *but when we tested our program we realised that the functions are not up_to_date which means not available anymore. |

||

| + | *Simultaneously we are still working on our ice_candidate error. |

||

| + | *We also found out that bacause we run an android application using two connections will be harder than we though in that we will have to use two distinct threads for each connection. otherwise there will be interferences between the two connections leading to major errors blocking the application. |

||

| + | *useful link:https://developer.android.com/reference/android/os/AsyncTask.html. and http://stackoverflow.com/questions/30816325/asynchronous-javascript-calls-from-android-webview |

||

| + | |||

| + | =Week12= |

||

| + | *Just figured our that it is impossible to make any other connection aside from a webRTC connection between two devices already connected with webRTC. |

||

| + | *So we removed all the part with the UDP and http part because it wasn't and wouldn't work |

||

| + | *we also found an error that was fatal in that it raised exceptions and blocked our program. that error was (instead of writing onMessage() we should have written onmessage() |

||

| + | *Now the program runs perfectly with the video flux. we also added a part that sends the motion's data from the phone to the computer using the already existing connection between the two devices with webRTC. |

||

| + | *We just had to create another json object containing the data about the phone's motion information and whenever these ones changes a new json object is created and sent to the computer (the other client). |

||

| + | *downloaded cylon and grot and android that helps to direct the cameras. |

||

| + | *still working on this part of the project. |

||

| + | *also renamed the two html files so that it would meet their real function. |

||

| + | *client.html became server.html (because it is the one that sends the video flux) and the other one was named client.html |

||

| + | *for cylon we got very inspired from this tutorial : https://cylonjs.com |

||

Latest revision as of 15:44, 1 April 2017

Team

ZENNOUCHE Douria, RIVOAL Alice

Teacher: DONSEZ Didier, RICM4

Product functions:

Our product is the software that links the robot and the user (the person wearing the Google VR headset). It provides a set of functionalities. First, it establishes the connection between the phone and the robot (what we call in computer science the client and the server). Then the user can explore the space around the robot by moving their head in any direction: each head movement creates a request that is sent to the robot and turns the camera in the same direction as the user's head.
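The head-to-camera mapping described above can be illustrated with a minimal TypeScript sketch. It is not the project's code: it assumes the phone reports a heading (yaw) and an up/down angle (pitch) in degrees, as the browser's deviceorientation event does with its alpha, beta and gamma fields, and that each camera axis is driven by a servo accepting 0 to 180 degrees. The names HeadOrientation, CameraCommand and toCameraCommand, and the servo range, are hypothetical.

```typescript
// Hypothetical message types: the project's real format is not documented.
interface HeadOrientation {
  yaw: number;   // heading in degrees, e.g. deviceorientation alpha (0..360)
  pitch: number; // up/down tilt in degrees; which device angle this is depends
                 // on how the phone sits in the headset (assumption)
}

interface CameraCommand {
  pan: number;  // servo angle in degrees, 90 = straight ahead
  tilt: number; // servo angle in degrees, 90 = level
}

// Assumed servo range; adjust to the actual hardware.
const SERVO_MIN = 0;
const SERVO_MAX = 180;
const SERVO_CENTER = 90;

// Wrap an angle into (-180, 180] so that turning slightly past the
// reference heading gives a small offset instead of one close to 360.
function wrapDegrees(angle: number): number {
  let a = angle % 360;
  if (a <= -180) a += 360;
  if (a > 180) a -= 360;
  return a;
}

function clamp(value: number, min: number, max: number): number {
  return Math.min(max, Math.max(min, value));
}

// Map the head orientation to servo angles, relative to the heading the
// user had when the session started (referenceYaw). The sign of the pan
// offset depends on how the servo is mounted.
function toCameraCommand(head: HeadOrientation, referenceYaw: number): CameraCommand {
  const yawOffset = wrapDegrees(head.yaw - referenceYaw);
  return {
    pan: clamp(SERVO_CENTER + yawOffset, SERVO_MIN, SERVO_MAX),
    tilt: clamp(SERVO_CENTER + head.pitch, SERVO_MIN, SERVO_MAX),
  };
}
```

For example, with a reference heading of 350 degrees, a reading of yaw 10 gives a 20-degree offset and therefore a pan of 110, instead of a jump to the end of the servo's range. Clamping keeps the command valid when the user looks further than the servos can turn.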

General constraints:

In order to work correctly, this software has to be run with Google Chrome or on Android, because it relies on technologies such as WebRTC that we could only use on these two platforms.

Week1

We chose our project and got the necessary equipment from the FabLab (the cameras and the Google VR headset).

Week2

- We drew the UML diagrams of our project.

- We looked at Zhao Zilong's GitHub, where we found his and Hammouti's code.

- We started to go through their code.

- We watched tutorials about JavaScript (Alice) and WebRTC (Douria).

Week3

- We continued working on last year's students' project.

- We contacted last year's students to arrange a meeting, which was scheduled for the following week.

- We watched tutorials about web technologies and JavaScript.

Week4

- We met Zilong Zhao and Guillaume Hammouti, who did the same project last year. They helped us run their code.

- They explained to us what each of their folders does:

- websocket_server contains the server, which must be started first.

- cs contains the HTML of the two clients (the one connected to the two cameras and the one that receives the image stream).

- According to Zhao and Guillaume, Stereo and video are of no use.

- Datachannel contains the software that turns the cameras in the same direction as the Oculus Rift: the Oculus's position data is sent through port 5000, so they simply read it from port 5000.

Finally, we ran the software, and it worked perfectly.
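The log does not say what websocket_server exchanges, but in a typical WebRTC application such a server acts as a signaling relay: each client sends its SDP offer or answer and its ICE candidates to the server, which forwards them to the other client, and the peer-to-peer connection can only be set up once this exchange has happened. That would explain why it must be started first. The TypeScript sketch below shows this relay logic in memory under that assumption; SignalingRelay, SignalMessage and the message fields are hypothetical, and a real server would deliver messages over WebSocket connections rather than callbacks.

```typescript
// Hypothetical signaling message shapes (SDP offer/answer and ICE candidates).
type SignalMessage =
  | { kind: "offer"; sdp: string }
  | { kind: "answer"; sdp: string }
  | { kind: "candidate"; candidate: string };

// How the relay hands a message to one client (a WebSocket send in practice).
type Deliver = (from: string, message: SignalMessage) => void;

class SignalingRelay {
  private clients = new Map<string, Deliver>();

  // Called when a client (e.g. the phone page or the computer page) connects.
  register(id: string, deliver: Deliver): void {
    this.clients.set(id, deliver);
  }

  // Called when a client disconnects.
  unregister(id: string): void {
    this.clients.delete(id);
  }

  // Forward a message to every other registered client and return how many
  // received it; 0 means the other peer has not connected yet.
  relay(fromId: string, message: SignalMessage): number {
    let delivered = 0;
    this.clients.forEach((deliver, id) => {
      if (id !== fromId) {
        deliver(fromId, message);
        delivered++;
      }
    });
    return delivered;
  }
}
```

With only one client registered, relay() returns 0: nothing is forwarded until both pages are connected to the server.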

Week5

We added code that allows the program to run on our mobile phone.

- We added a file in the cs folder so that, to run the program on our phone, all we had to do was type 192.168.43.253:5000/server.html; the HTML page then runs correctly.

Week6

We tried to simplify our architecture by running the client and the server on the same computer. We soon realised that, for security reasons, we would not be able to achieve our goal over HTTP, so we started looking into how to switch from HTTP to HTTPS.
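One likely source of these security restrictions (an assumption: the log does not name it) is that Chrome only allows camera access through getUserMedia, which WebRTC video capture relies on, on secure origins: pages served over HTTPS, or from the local machine. A page loaded over plain HTTP from a LAN address such as 192.168.43.253:5000 is not a secure origin, so getUserMedia is unavailable there. The TypeScript sketch below approximates that rule; isLikelySecureOrigin is a hypothetical helper and a simplification of the browser's real secure-context algorithm.

```typescript
// Simplified approximation of the browser's "potentially trustworthy origin"
// rule; real browsers handle more cases (file: URLs, flags, policies).
function isLikelySecureOrigin(pageUrl: string): boolean {
  const url = new URL(pageUrl);
  // Encrypted transports are always considered secure.
  if (url.protocol === "https:" || url.protocol === "wss:") {
    return true;
  }
  // Loopback hosts are treated as secure even over plain HTTP.
  const host = url.hostname;
  return host === "localhost" || host === "[::1]" || /^127(\.\d{1,3}){3}$/.test(host);
}
```

Under this rule, serving the pages over HTTPS is what makes camera access possible from any device other than the one running the server.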

Week7

<holidays>

Week8

We went to the FabLab to get the design files of our equipment so that we could print it again, and also to get some help with the HTTPS conversion.

Week9

- Once again we went to the FabLab and got a script to generate a security certificate.

- To secure the connection between the different devices of the project, we first created an ssl folder in css and added two files that we ran one after the other.

- The first one generated a certificate that had to be downloaded by every other device involved in the connection (the phone).

- On the computer (which is also the server), we added this certificate to Chrome by following these steps: Settings -> Show advanced settings -> in the HTTPS/SSL section, Manage certificates.

- We also modified the HTTP server (Express) so that it uses HTTPS instead of HTTP, making the whole code base consistent.

- For this part, because most of the methods and functions used in our project were no longer available, we had to update the code and replace some of them with more recent ones.

- Finally, we fixed our equipment.

Week10

- We implemented the part that collects the phone's motion data (this part works perfectly). To test it at this stage, we used alert() instead of console.log(), because the data stays on the phone, where console output is not easy to see.

- We are still working on updating the code.

- We still need to send the phone's motion data back to the server to pilot our cameras.

Week11

- We started coding an HTTP server to record the phone's position on the computer, but we soon realised that this approach would not work with the certificate we had made earlier (when we switched from HTTP to HTTPS).

- Instead of HTTP, we decided to use UDP (using https://developer.chrome.com/apps/socket#method-read).

- However, when we tested our program, we realised that these functions are outdated and no longer available.

- At the same time, we are still working on our ice_candidate error.

- We also found out that, because we run an Android application, using two connections will be harder than we thought: we will need a separate thread for each connection, otherwise the two connections will interfere with each other and cause major errors that block the application.

- Useful links: https://developer.android.com/reference/android/os/AsyncTask.html and http://stackoverflow.com/questions/30816325/asynchronous-javascript-calls-from-android-webview

Week12

- We just figured out that it is impossible to open any connection other than the WebRTC one between two devices that are already connected with WebRTC.

- So we removed all the UDP and HTTP code, because it did not and would not work.

- We also found a fatal error that raised exceptions and blocked our program: we had written onMessage() instead of onmessage().

- The program now runs perfectly with the video stream. We also added a part that sends the motion data from the phone to the computer over the existing WebRTC connection between the two devices.

- We only had to create another JSON object containing the phone's motion data; whenever this data changes, a new JSON object is created and sent to the computer (the other client).

- We downloaded Cylon, Gort and the Android tools that help direct the cameras.

- We are still working on this part of the project.

- We also renamed the two HTML files so that their names match their actual roles.

- client.html became server.html (because it is the page that sends the video stream), and the other page was renamed client.html.

- For Cylon, we drew heavily on this tutorial: https://cylonjs.com
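The motion-data exchange described in this week's entries can be sketched in TypeScript as follows. This is a minimal sketch under stated assumptions, not the project's code: it assumes a JSON message of the hypothetical shape { type: "motion", alpha, beta, gamma }, sent only when a reading differs from the last one sent by at least a threshold (the 1-degree default is arbitrary). To keep it runnable outside a browser, the WebRTC data channel is replaced by a stand-in with the same send() method and onmessage property that RTCDataChannel has. With a real RTCDataChannel, the handler must likewise be assigned to the all-lowercase onmessage, because the browser never calls a property named onMessage. MotionSender, listenForMotion and createLoopbackPair are hypothetical names.

```typescript
// Hypothetical message shape, mirroring deviceorientation's angles (degrees).
interface MotionMessage {
  type: "motion";
  alpha: number;
  beta: number;
  gamma: number;
}

// The two members of RTCDataChannel this sketch relies on.
interface DataChannelLike {
  send(data: string): void;
  onmessage: ((event: { data: string }) => void) | null;
}

// Phone side: serialise a reading and send it only if it changed enough.
class MotionSender {
  private last: MotionMessage | null = null;

  constructor(private channel: DataChannelLike, private thresholdDeg = 1) {}

  // Returns true if the reading was sent.
  update(alpha: number, beta: number, gamma: number): boolean {
    const next: MotionMessage = { type: "motion", alpha, beta, gamma };
    const prev = this.last;
    const changed =
      prev === null ||
      Math.abs(next.alpha - prev.alpha) >= this.thresholdDeg ||
      Math.abs(next.beta - prev.beta) >= this.thresholdDeg ||
      Math.abs(next.gamma - prev.gamma) >= this.thresholdDeg;
    if (changed) {
      this.channel.send(JSON.stringify(next));
      this.last = next;
    }
    return changed;
  }
}

// Computer side: parse incoming messages and keep only motion data.
function listenForMotion(channel: DataChannelLike, onMotion: (m: MotionMessage) => void): void {
  // Must be "onmessage": the browser never calls a property named "onMessage".
  channel.onmessage = (event) => {
    const msg = JSON.parse(event.data);
    if (msg && msg.type === "motion") {
      onMotion(msg as MotionMessage);
    }
  };
}

// In-memory stand-in linking two endpoints, used instead of a real RTCDataChannel.
function createLoopbackPair(): [DataChannelLike, DataChannelLike] {
  const a: DataChannelLike = { send: (d) => b.onmessage?.({ data: d }), onmessage: null };
  const b: DataChannelLike = { send: (d) => a.onmessage?.({ data: d }), onmessage: null };
  return [a, b];
}
```

In the browser, the phone page would call update() from its deviceorientation handler, and the computer page would pass its real RTCDataChannel to listenForMotion(). Sending only on significant changes keeps the channel from being flooded, since orientation events fire many times per second.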