Difference between revisions of "Sign2Text"

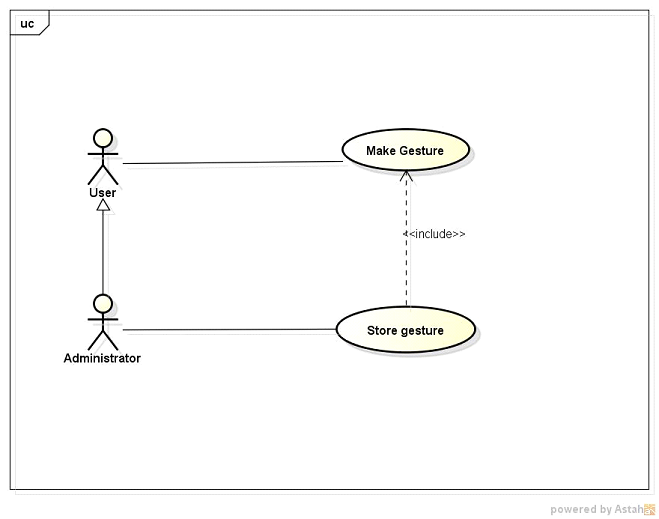


Latest revision as of 19:05, 16 February 2013

Introduction

Purpose of the requirements document

This document describes the design of the system's features.

Scope of the product

Despite the substantial progress that came with the emergence of sign languages, the integration of people with hearing loss into society still faces obstacles, mainly because most people do not know these languages. It is therefore necessary to improve and facilitate this integration. This project aims to design a system that can translate Langage Parlé Complété (LPC) gestures into text. This will make communication possible between LPC users and non-users without the need for interpreters.

Definitions, acronyms and abbreviations

LPC - Langage Parlé Complété. LPC is a manual code, made with the hand around the face, that supplements lipreading.

References

http://software.intel.com/en-us/vcsource/tools/perceptual-computing-sdk

Overview of the remainder of the document

The rest of the document contains technical characteristics of the project’s development, and descriptions of other scenarios in which it can be used.

General description

Product perspective

The system will be used by people with hearing loss, who will use it to translate sign language into text.

Product functions

Translate sign language into text.

User characteristics

The user should know Langage Parlé Complété (LPC).

General constraints

Assumptions and dependencies

Specific requirements, covering functional, non-functional and interface requirements

Requirement X.Y.Z (in Structured Natural Language)

Make gestures

- Description: This use case is responsible for capturing the gestures made by the user and translating them into the corresponding text.

- Inputs: Gestures.

- Outputs: Text.

- Destination: User/System.

- Action: Show text corresponding to gestures.

- Pre-condition: Calibrate position.
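
The "Make gestures" use case above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the project's implementation: the class `GestureTranslator`, its methods, and the gesture labels are hypothetical, and real gesture capture through the camera SDK is replaced by plain string labels.

```python
class GestureTranslator:
    """Hypothetical sketch of the 'Make gestures' use case."""

    def __init__(self, known_gestures):
        # known_gestures maps a gesture label to its text (illustrative data).
        self.known_gestures = dict(known_gestures)
        self.calibrated = False

    def calibrate(self):
        # Stands in for the 'Calibrate position' pre-condition.
        self.calibrated = True

    def translate(self, gestures):
        # Inputs: a sequence of gesture labels. Outputs: the matching text.
        if not self.calibrated:
            raise RuntimeError("position must be calibrated first")
        # Unknown gestures are rendered as '?' instead of failing.
        return "".join(self.known_gestures.get(g, "?") for g in gestures)


translator = GestureTranslator({"g1": "pa", "g2": "ri"})
translator.calibrate()
print(translator.translate(["g1", "g2"]))
```

Refusing to translate before calibration mirrors the pre-condition listed for the use case; how calibration is actually performed is left open here.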

Store gestures

- Description: This use case is responsible for storing new gestures in the database.

- Inputs: Gestures/Text.

- Destination: System.

- Action: Save new gestures in the database.

- Pre-condition: Make gestures.
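
As a rough sketch of the "Store gestures" use case, the snippet below saves gesture/text pairs with Python's standard `sqlite3` module. The document does not name a database engine or schema, so SQLite, the `gestures` table, its columns, and the function names are all assumptions made for illustration.

```python
import sqlite3


def open_store(path=":memory:"):
    # Hypothetical schema: one row per gesture label with its text.
    conn = sqlite3.connect(path)
    conn.execute(
        "CREATE TABLE IF NOT EXISTS gestures ("
        "label TEXT PRIMARY KEY, meaning TEXT NOT NULL)"
    )
    return conn


def store_gesture(conn, label, meaning):
    # Inputs: Gestures/Text. The 'with' block commits the transaction.
    with conn:
        conn.execute(
            "INSERT OR REPLACE INTO gestures (label, meaning) VALUES (?, ?)",
            (label, meaning),
        )


def lookup_text(conn, label):
    # Returns the stored text, or None if the gesture is unknown.
    row = conn.execute(
        "SELECT meaning FROM gestures WHERE label = ?", (label,)
    ).fetchone()
    return row[0] if row else None


conn = open_store()
store_gesture(conn, "g1", "pa")
print(lookup_text(conn, "g1"))
```

Storing a label that already exists overwrites its text in this sketch; whether the real system should overwrite or reject duplicates is not specified in the requirements.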

Non-Functional Requirements

- Performance Requirement

- Description: The system should recognize gestures within a time the user finds satisfactory. Recognition should take less than 5 seconds.
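
One way to check the 5-second limit during testing is to time each recognition call. The helper below is a minimal sketch: `timed_recognition` and the `recognize` callable are hypothetical names, and the recognizer used in the example is a stand-in rather than the project's code.

```python
import time

# Limit taken from the performance requirement above.
MAX_RECOGNITION_SECONDS = 5.0


def timed_recognition(recognize, gesture):
    # Runs the recognizer and reports the text, the elapsed time in
    # seconds, and whether the call met the limit.
    start = time.perf_counter()
    text = recognize(gesture)
    elapsed = time.perf_counter() - start
    return text, elapsed, elapsed < MAX_RECOGNITION_SECONDS


# Stand-in recognizer for illustration only.
text, elapsed, within_limit = timed_recognition(lambda g: g.upper(), "pa")
print(text, within_limit)
```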