Fiche: Difference between revisions

Anna.Bruel (talk | contribs) No edit summary |

Anna.Bruel (talk | contribs) No edit summary |

||

| (2 intermediate revisions by the same user not shown) | |||

| Line 1: | Line 1: | ||

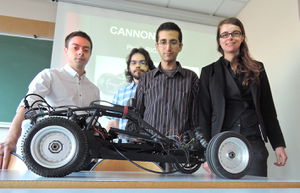

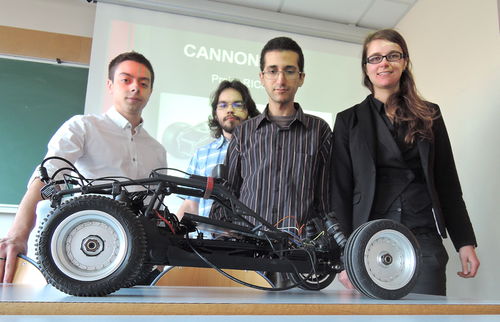

Latest revision as of 10:51, 1 February 2016

Preamble

The project subject is described on the page CannonBall_de_voitures_autonomes.

This project has been handed on from year to year between Polytech and Ensimag students:

From January 13th 2014 to March 2nd 2014: Jules Legros and Benoit Perruche from Polytech'Grenoble. Wiki Air page (http://air.imag.fr/index.php/Proj-2013-2014-Cannonball-de-Voitures-Autonomes)

From May 26th 2014 to June 16th 2014: Thibaut Coutelou, Benjamin Mugnier and Guillaume Perrin from Ensimag. Project page (http://fablab.ensimag.fr/index.php/Cannonball_de_voitures_RC/FicheSuivi)

From January 14th 2015 to March 2nd 2015: Malek Mammar and Ophélie Pelloux-Prayer from Polytech'Grenoble. Project page (http://air.imag.fr/index.php/Project-2014-2015-CannonBall)

This project is based on the work of Jules Legros and Benoit Perruche.

It has also been worked on by Thibaut Coutelou, Benjamin Mugnier and Guillaume Perrin.

It is now handled by four Polytech students: Alexandre LE JEAN / Malek MAMMAR / Ophélie PELLOUX-PRAYER / Hugo RODRIGUES

Project presentation

Team

- Supervisors: Amr Alyafi, Didier Donsez

- Members: Malek MAMMAR / Ophélie PELLOUX-PRAYER / Alexandre LE JEAN / Hugo RODRIGUES

- Department: RICM 4 (http://www.polytech-grenoble.fr/ricm.html), Polytech Grenoble

Specifications

- get to grips with OpenCV on Windows 8, Android and Ubuntu

- benchmark OpenCV marker recognition on different platforms and different camera models

- implement a vehicle steering algorithm (trajectory control, acceleration control, ...)

- build a vehicle driving simulator

- evaluate the algorithms on vehicles

- set up an infrastructure server that collects vehicle driving parameters

- propose and implement a consensus algorithm that lets several vehicles travel in convoy while keeping a safety distance between them. To this end, the speed and location information recorded by the vehicles and by road radars is to be broadcast reliably and in real time between the vehicles travelling on the same route or route segment.
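As an illustration of the convoy requirement, here is a minimal sketch of a gap-keeping rule. It is not a consensus algorithm and not the project's implementation: it is a plain proportional controller in which a follower adjusts its speed from the leader's broadcast speed and the measured gap. The struct, the function name and all parameter values are assumptions made for this example.

```cpp
#include <algorithm>
#include <cassert>

// Hypothetical parameters; none of these values come from the project.
struct GapController {
    double target_gap_m;   // safety distance to keep behind the leader
    double gain_per_s;     // speed correction (m/s) per metre of gap error
    double max_speed_mps;  // upper bound on the commanded speed
};

// Speed the follower should adopt, given the leader's broadcast speed
// and the currently measured gap to the leader.
double follower_speed(const GapController& c, double leader_speed_mps, double gap_m) {
    assert(c.max_speed_mps >= 0.0);
    const double gap_error_m = gap_m - c.target_gap_m;  // > 0 means "too far behind"
    const double command = leader_speed_mps + c.gain_per_s * gap_error_m;
    // Never command a negative speed or exceed the configured maximum.
    return std::max(0.0, std::min(c.max_speed_mps, command));
}
```

With a target gap of 2 m and a gain of 0.5, a follower that is 1 m too close to a leader driving at 3 m/s would be told to slow down to 2.5 m/s.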

Links

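GitHub

- CannonBall (https://github.com/malek0512/2014_2015_ricm4_cannon_ball)

- CannonBall Simulator (https://github.com/OpheliePELLOUXPRAYER/ricm4_CannonBall_Simulator)

Documents

- Developer Guide (http://air.imag.fr/index.php/Project_2014-2015-CannonBall/developer_guide)

- User manual (http://air.imag.fr/index.php/File:User_manual_cannon_ball_ricm.pdf)

- Software Requirements Specifications (http://air.imag.fr/index.php/Project_2014-2015-CannonBall/SRS)

- UML Diagram (http://air.imag.fr/index.php/Project_2014-2015-CannonBall/UML)

- Scrum (http://air.imag.fr/index.php/Project_2014-2015-CannonBall/Scrum)

- Video (http://youtu.be/bkoyAkvbdVI)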

Progress of the project

The project started January 14th, 2015.

Week 1 (January 13th - January 18th)

- Project discovery

- Discovery of OpenCV

- Collecting the hardware

Week 2 (January 19th - January 25th)

Software engineering work: Software Requirements Specifications (http://air.imag.fr/index.php/Project_2014-2015-CannonBall/SRS)

How to set up the project: Developer Guide (http://air.imag.fr/index.php/Project_2014-2015-CannonBall/developer_guide)

Technology watch

- The idea of using a Raspberry Pi for image processing was set aside because it lacks CPU power. To give an order of magnitude, the Core i3 of the Lenovo ThinkPad tablet .... (figures for the tablet still to be added)

- The idea of using a Raspberry Pi to broadcast the video stream to a local server, with another machine doing the processing, is not viable because of the transmission latency of the stream. To give an order of magnitude: with a latency of ~1 second, a car at 30 km/h would travel ~8.3 m before the second machine performs its processing.

- The idea of using a compact mini tower (example: GB-XM1-3537) is interesting, but with no on-board battery it depends on a 220 V power source.

- The idea of using a phone is interesting because of the richness and accuracy of its sensors (accelerometer, gyroscope, ...), especially if it has a Tegra-type processor, which offers greater processing performance. Note that a basic ARM processor would not be enough.

Moreover, a phone is small and has an on-board battery. The main concern of our predecessors was that external cameras were not supported on Android. We will check this, because what was true a year ago might no longer be true today.
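The order of magnitude quoted for the streaming latency (about 8.3 m at 30 km/h with ~1 second of latency) can be reproduced with a short sketch. The function name is ours and purely illustrative.

```cpp
#include <cassert>

// Distance (in metres) travelled during `latency_s` seconds at `speed_kmh` km/h.
double distance_during_latency_m(double speed_kmh, double latency_s) {
    assert(speed_kmh >= 0.0 && latency_s >= 0.0);
    const double speed_mps = speed_kmh * 1000.0 / 3600.0;  // km/h -> m/s
    return speed_mps * latency_s;
}
```

Calling `distance_during_latency_m(30.0, 1.0)` gives roughly 8.33 m, which matches the figure above; the same function can be used to see how much latency a given safety margin tolerates.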

Technologies chosen

- We keep the MongoDB database because it offers high write performance and is scalable.

- We keep Node.js to create a local server through which the tablet can display all the data. We use several modules:

  - socket.io for real-time messaging between the client and the server

  - mqtt for a publish/subscribe protocol

  - mongoose for manipulating the MongoDB database from JavaScript

- We keep the ArUco library, which we judge powerful enough for our requirements. In addition, it is based on the OpenCV library.

What we have to accomplish

- Finish the prototype so that it can be presented on March 18th, 2015.

  - Redirect the metrics and the webcam stream from the car to any computer connected to the tablet's network

  - Enhance the car's algorithms.

  - Create a car convoy algorithm.

What we want to do

- Move to a Linux environment with a non-proprietary IDE to replace Visual Studio 2013

- We will stick to C++ because it is closest to the OpenCV library, which is itself written in C++.

What we are expected to consider

- Enhance the movements of the mini car. There are two ways to do so:

  - Add a MEMS shield to the Arduino Uno

  - Replace the Arduino with an STM32 board, which already has embedded MEMS sensors (micro-electromechanical sensors, including accelerometers, gyroscopes, digital compasses, inertial modules, pressure sensors, humidity sensors and microphones).

  - An application with an Oculus device

The risks

- Our ability to take over our predecessors' code rather than starting again from scratch

- Misunderstandings and communication problems among ourselves and with the 3i team

- Reaching a dead end because of the hardware choices

- Hitting a speed limit imposed by the tablet's image-processing capacity

Week 3 (January 26th - February 1st)

Software engineering work: UML (http://air.imag.fr/index.php/Project_2014-2015-CannonBall/UML)

Note that we have been joined by two colleagues, A. Le Jean and H. Rodrigues.

- New distribution of tasks: two of us (Rodrigues and Mammar) will work on the network part, and the other two (Pelloux-Prayer and Le Jean) will work on optimising the use of OpenCV

- We managed to compile our predecessors' code

- Meeting with the 3i team to coordinate our efforts, with the aim of improving the project as a whole. They made the following proposals:

  - Install a mechanical arm on the car

  - Make the camera swivel through 180 degrees

  - Replace the Arduino board with an STM32 (equipped with more sensors) to improve the precision of movement

  - Replace the Arduino board with a board designed for image processing and evaluate the possible performance gain

Week 4 (February 2nd - February 8th)

Software engineering work: Scrum (http://air.imag.fr/index.php/Project_2014-2015-CannonBall/Scrum)

- We optimised our use of OpenCV, going from 3 fps to 15 fps
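A frame-rate gain like this one has to be measured somehow; the log does not say how it was done. As a hedged sketch, a sliding-window meter such as the one below could be fed one timestamp per processed frame. The class name `FpsMeter` and its interface are assumptions for this example, and timestamps are passed in explicitly so that the class does not depend on any camera or clock API.

```cpp
#include <cassert>
#include <cstddef>
#include <deque>

// Sliding-window frame-rate estimate over the last `window` timestamps.
class FpsMeter {
public:
    explicit FpsMeter(std::size_t window) : window_(window) { assert(window >= 2); }

    // Record the time (in seconds) at which a frame finished processing.
    void tick(double t_s) {
        stamps_.push_back(t_s);
        if (stamps_.size() > window_) stamps_.pop_front();
    }

    // Frames per second over the window, or 0 if fewer than two frames were seen.
    double fps() const {
        if (stamps_.size() < 2) return 0.0;
        const double span_s = stamps_.back() - stamps_.front();
        if (span_s <= 0.0) return 0.0;
        return static_cast<double>(stamps_.size() - 1) / span_s;
    }

private:
    std::size_t window_;
    std::deque<double> stamps_;
};
```

In a processing loop, one would call `tick()` with the current time after each frame and display `fps()` alongside the other metrics.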

Week 5 (February 9th - February 15th)

Software engineering work: DesignPatterns (http://air.imag.fr/index.php/Project_2014-2015-CannonBall/DesignPatterns)

Week 6 (February 16th - February 22nd) ~Holidays~

Corrections:

- Arduino: launching the main program now directly resets the Arduino board to a state in which it is ready to receive data.

- The network part has made good progress: the metrics are displayed in real time on an HTML page accessible locally at localhost:1337. This will later be adapted to a Wi-Fi router built from a Raspberry Pi and a Wi-Fi dongle.

- New feature: the video is streamed on the HTML page.

- The tablet is configured and ready for a test with the car.

Remark: We noticed a significant performance gap between our machines and the (less powerful) tablet. This sometimes causes a communication delay between the Arduino and the tablet that is long enough for the Arduino board to switch to its emergency state: as a preventive measure, the Arduino stops the car. Improvements to the image-processing code are to be expected.
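The emergency behaviour described in the remark amounts to a communication watchdog. The sketch below shows one way such a check can be written; it is not the project's Arduino code. The class name, the 200 ms timeout used in the usage note and the convention of passing the current time as a millisecond counter are all assumptions.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical watchdog: stop the car when no command has arrived
// from the tablet for longer than `timeout_ms`.
class CommandWatchdog {
public:
    explicit CommandWatchdog(std::uint32_t timeout_ms) : timeout_ms_(timeout_ms) {
        assert(timeout_ms > 0);
    }

    // Call whenever a command arrives from the tablet.
    void on_command(std::uint32_t now_ms) {
        last_command_ms_ = now_ms;
        emergency_ = false;
    }

    // Call on every control-loop iteration; returns true if the car must stop.
    bool update(std::uint32_t now_ms) {
        // Unsigned subtraction stays correct when the millisecond counter wraps.
        if (now_ms - last_command_ms_ > timeout_ms_) emergency_ = true;
        return emergency_;
    }

private:
    std::uint32_t timeout_ms_;
    std::uint32_t last_command_ms_ = 0;
    bool emergency_ = false;
};
```

With `CommandWatchdog w(200)`, a command received at t = 1000 ms keeps the car running until t = 1200 ms; from 1201 ms on, `update()` reports the emergency state until the next command arrives. A slow image-processing loop on the tablet therefore shows up directly as spurious stops.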

Bugs:

- Mosquitto bug: when listening to the messages of a topic, the function mosquitto_message_callback_set(...) raises an exception that cannot be wrapped in a try { } catch () block, simply because it is thrown by a separate (mosquitto) thread running in parallel. Since each thread has its own stack, it is apparently not possible to catch it directly from main.
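One common way around this kind of problem in standard C++ is to catch the exception inside the callback itself, store it in a `std::exception_ptr`, and rethrow it later from the main thread. The sketch below illustrates that idea only; we did not apply it in the project. `ExceptionMailbox` and its methods are hypothetical names, and the wrapped callback is a generic `std::function<void()>` rather than the C function pointer that libmosquitto expects.

```cpp
#include <cassert>
#include <exception>
#include <functional>
#include <mutex>
#include <stdexcept>
#include <utility>

// Carries an exception from the thread running a callback to the main thread.
class ExceptionMailbox {
public:
    // Wrap `body` so that anything it throws is captured instead of
    // escaping from the thread that runs the callback.
    std::function<void()> guard(std::function<void()> body) {
        assert(body);
        return [this, body]() {
            try {
                body();
            } catch (...) {
                std::lock_guard<std::mutex> lock(mutex_);
                if (!pending_) pending_ = std::current_exception();  // keep the first one
            }
        };
    }

    // Call from the main thread: rethrows a captured exception, if any.
    void rethrow_if_any() {
        std::exception_ptr e;
        {
            std::lock_guard<std::mutex> lock(mutex_);
            std::swap(e, pending_);
        }
        if (e) std::rethrow_exception(e);
    }

private:
    std::mutex mutex_;
    std::exception_ptr pending_;
};
```

With a C callback API, the same try/catch would sit at the top of the message callback, and the main loop would call `rethrow_if_any()` periodically inside its own try block.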

Week 7 (February 23rd - March 1st)

Bug

- We noticed an intermittent, sudden drift of the wheels.

Week 8 (March 2nd - March 8th)

Meeting with the 3i team about porting to the STM32 Nucleo

The vehicle simulator part has begun: for this part we decided to use Java and the Slick2D library (http://slick.ninjacave.com/), which is compatible with Windows and Linux.

Week 9 (March 9th - March 15th)

Bugs

- We hoped to retrieve data from the tablet's sensors, but it turned out that C# was required. We prefer to put our effort into porting to the Nucleo board.

- We have some issues turning the Raspberry Pi into a router and access point (hostapd does not recognise the Wi-Fi dongle's driver).

- We are still trying to replace the Arduino with the Nucleo board. The code written by the 3i team for their Nucleo F304 is not compatible with our Nucleo F030-R8.

Week 10 (March 16th - March 22nd)

We worked on:

- the simulator. Here is the GitHub repository: CannonBall Simulator (https://github.com/OpheliePELLOUXPRAYER/ricm4_CannonBall_Simulator)

- AISheep, a new automaton able to read a succession of instructions from a single QR code in order to reach a destination.
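To make the AISheep idea concrete, here is a hedged sketch of how a list of instructions decoded from one QR code could be parsed. The payload format (`F100;L90;R45`, one letter followed by an integer, separated by semicolons) is invented for this example; the log does not describe the real encoding, and the type and function names are ours.

```cpp
#include <cassert>
#include <cstdlib>
#include <sstream>
#include <string>
#include <vector>

enum class Action { Forward, Left, Right, Stop };

struct Instruction {
    Action action;
    int value;  // distance or angle, depending on the action
};

// Parse a payload such as "F100;L90;F50;S0" (hypothetical format).
// Unknown or malformed tokens are skipped.
std::vector<Instruction> parse_instructions(const std::string& payload) {
    std::vector<Instruction> out;
    std::istringstream in(payload);
    std::string token;
    while (std::getline(in, token, ';')) {
        if (token.size() < 2) continue;  // need a letter and at least one digit
        Action a;
        switch (token[0]) {
            case 'F': a = Action::Forward; break;
            case 'L': a = Action::Left; break;
            case 'R': a = Action::Right; break;
            case 'S': a = Action::Stop; break;
            default: continue;  // unknown action letter
        }
        char* end = nullptr;
        const long v = std::strtol(token.c_str() + 1, &end, 10);
        assert(end != nullptr);
        if (*end != '\0') continue;  // not a clean integer
        out.push_back({a, static_cast<int>(v)});
    }
    return out;
}
```

The automaton would then execute the returned instructions one after another until the list is exhausted or a `Stop` is reached.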

Week 11 (March 23rd - March 29th)

- We fixed the intermittent, sudden drift of the wheels. The problem came from the Arduino code.

Week 12 (March 30th - April 5th)

- We have optimised the frame rate overall. For instance, on the tablet we get around 14-15 fps when all the settings (mentioned in the Optimisation section of the report) are disabled.

- We have made progress on the simulator: the car receives orders from the C++ AI code through the mosquitto broker and moves according to the QR codes placed in the simulator. So there is a real interaction between the researcher using the simulator and the algorithms.

Day D-2, April 6th

- We have finished porting to the Nucleo

- We noticed issues in the communication between the Java simulator and the C++ main program over the mosquitto pub/sub protocol. In both simulator modes (described below), the Java code receives only the first messages published by the C++ program and then stops. Moreover, I met the same problem on the C++ side when I created a subclass of the mosquitto class instead of using an instance of it directly.

There are two simulator modes:

- The first one replaces the car with a graphical window and the microcontroller with mosquitto messaging, since the microcontroller was only translating the speed and steering into pulse widths.

This mode removes the need for a car. It is for testing the image processing and the artificial intelligence without the hardware.

- The second mode is like the first, but it also replaces the image processing with the ability to place QR codes in the graphical window. The Java simulator sends coordinates to the C++ main program every time a QR code is added or deleted. This approach has not been tested but should be compatible with the AISheep automaton.

This mode removes the need for both a car and the image processing. It is for testing the artificial intelligence.
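For reference, the translation that the microcontroller performs (and that the simulator replaces) can be sketched as a simple mapping from a normalised command to a pulse width. The 1000-2000 microsecond range with 1500 as neutral is the usual convention for hobby servos and speed controllers; the car's actual calibration is not given here, so these constants and the function name are assumptions.

```cpp
#include <algorithm>
#include <cassert>

// Map a normalised steering or speed command in [-1, 1] to a pulse width
// in microseconds (assumed range: 1000 to 2000, neutral at 1500).
int command_to_pulse_us(double command) {
    assert(command == command);  // reject NaN, which would be a programming error
    const double clamped = std::max(-1.0, std::min(1.0, command));
    // The sum is always positive, so adding 0.5 before truncating rounds to nearest.
    return static_cast<int>(1500.0 + 500.0 * clamped + 0.5);
}
```

In the first simulator mode, the value that would have gone through this mapping is instead published as a mosquitto message and applied to the car drawn in the graphical window.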