Apache Flink

Revision as of 04:45, 16 September 2016

Apache Flink® is an open source platform for distributed stream and batch data processing. Flink’s core is a streaming dataflow engine that provides data distribution, communication, and fault tolerance for distributed computations over data streams.

Getting started

Installation

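cd ~
wget http://www.apache.org/dyn/closer.lua/flink/flink-1.1.2/flink-1.1.2-bin-hadoop27-scala_2.11.tgz
tar xf flink-1.1.2-bin-hadoop27-scala_2.11.tgz
export FLINK_HOME=~/flink-1.1.2
cd $FLINK_HOME
ls bin
ls examples

The closer.lua link opens a mirror-selection page, so if wget saves an HTML file instead of the archive, download the .tgz from one of the mirrors listed there. Set FLINK_HOME the same way in every new terminal used below.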
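Before starting Flink, a quick sanity check can confirm that FLINK_HOME points at the unpacked distribution. This is a minimal sketch: `check_flink_home` is a hypothetical helper, not a script shipped with Flink, and it only checks for the files that the steps on this page use.

```shell
#!/bin/sh
# check_flink_home is a hypothetical helper, not part of Flink.
# It checks that the files used on this page exist under the given directory
# (default: $FLINK_HOME) and returns non-zero on the first missing one.
check_flink_home() {
  home="${1:-$FLINK_HOME}"
  if [ -z "$home" ]; then
    echo "FLINK_HOME is not set and no directory was given" >&2
    return 1
  fi
  for f in bin/flink bin/start-local.sh bin/stop-local.sh \
           bin/start-scala-shell.sh \
           examples/streaming/SocketWindowWordCount.jar; do
    if [ ! -e "$home/$f" ]; then
      echo "missing: $home/$f" >&2
      return 1
    fi
  done
  echo "FLINK_HOME looks OK: $home"
}
```

Run it as `check_flink_home` after setting FLINK_HOME, or pass a directory explicitly, e.g. `check_flink_home ~/flink-1.1.2`.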

Local Execution

Terminal 1: start Flink

cd $FLINK_HOME
bin/start-local.sh

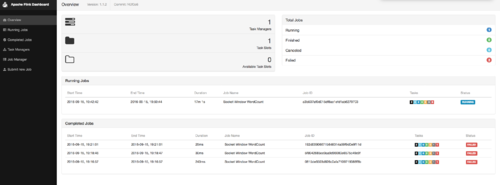

Open the web UI at http://localhost:8081/#/overview.

Run the SocketWindowWordCount example (source).

Terminal 2: Start netcat

nc -l 9000

Terminal 3: Submit the Flink program:

cd $FLINK_HOME
bin/flink run examples/streaming/SocketWindowWordCount.jar --port 9000

Terminal 2: type some words into the netcat input, for example:

lorem ipsum ipsum ipsum ipsum bye
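To see roughly what to expect in the job's output, the counting step can be approximated in plain shell. This is a sketch, not the example's actual code: SocketWindowWordCount counts words per time window, whereas the hypothetical `window_word_count` below treats all of its input as a single window, so it matches the job's result only if all the words arrive within one window. The `word : count` output layout is also an assumption.

```shell
#!/bin/sh
# window_word_count is a hypothetical helper that approximates ONE window of
# SocketWindowWordCount as a batch job: it ignores time entirely, splits each
# input line on whitespace, and prints "word : count" per distinct word.
window_word_count() {
  awk '{ for (i = 1; i <= NF; i++) count[$i]++ }
       END { for (w in count) print w " : " count[w] }' | sort
}

# The sample input typed into netcat above.
echo "lorem ipsum ipsum ipsum ipsum bye" | window_word_count
# prints:
# bye : 1
# ipsum : 4
# lorem : 1
```

The final `sort` only makes the sketch's output deterministic; the streaming job does not necessarily print its results in this order.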

Terminal 4: follow the word counts printed by the job:

cd $FLINK_HOME
tail -f log/flink-*-jobmanager-*.out

Terminal 1: stop Flink

cd $FLINK_HOME
bin/stop-local.sh

Scala shell

cd $FLINK_HOME
bin/start-scala-shell.sh local

TBC

Cluster execution

Amazon AWS EC2

https://ci.apache.org/projects/flink/flink-docs-release-1.0/setup/aws.html