Proj-2013-2014-BrasRobot-Handicap-1

Revision as of 16:59, 11 February 2014

Description and Goals

The long-term goal of this project is to develop control software for a manipulator arm that assists people with disabilities.

Team

- Supervisor: Olivier Richard

- El Hadji Malick FALL, Adji Ndèye Ndaté SAMBE

- Department: RICM 4, Polytech Grenoble

Documents

The Software Requirements Specification (SRS) can be found here

Steps

The project was assigned on Monday, January 13th, and started the following day, January 14th, 2014.

Week 1 (January 13th - January 19th)

- Project discovery

- Meeting with the other members of the project to assign roles. Each pair chose the part of the project it wanted to work on; we chose marker detection.

- Research on marker detection

Week 2 (January 20th - January 26th)

- Test configuration of the robot

- Learning C++

- Discovery of OpenCV

In detail, we looked at the report and code of the previous group and studied the methods they proposed for marker detection, including the Hough transform and Haar training. We then researched how OpenCV works. One open question is which criteria the markers should rely on: color, shape, or both.

Week 3 (January 27th - February 2nd)

- Discovery of ArUco

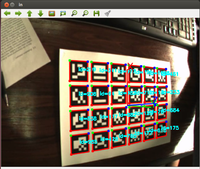

ArUco is a minimal library for Augmented Reality applications based on OpenCV. One of its features is that it can detect markers with a single line of C++ code, which will be very useful for our approach. As described on its SourceForge website, each marker has a unique code given by the black and white cells it contains. The library detects borders and analyzes the resulting rectangular regions to find those likely to be markers. Each candidate is then decoded, and if the code is valid, the rectangle is considered a marker. Our next step is therefore to choose which kind of marker we will use and to define the steps the robot will follow to retrieve the marker. We also started learning Python, which will be used to develop the ArUco solution. Installing PyDev took much more time than we thought. Now that it is done, we are developing our own solutions in Python, inspired by ArUco's.
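The decoding step described above can be sketched in Python as follows. This is a minimal illustration, not ArUco's code: it assumes the candidate rectangle has already been perspective-corrected, binarized and sampled into a square grid of cells (0 = black, 1 = white), and it uses a hypothetical one-entry 4x4 dictionary (`DICTIONARY`, marker id 7). It checks that the outer ring is black, extracts the inner bits and compares them with the dictionary in all four orientations, since a marker may be seen rotated.

```python
from typing import Optional

# Square grid of cells, 0 = black, 1 = white.
Grid = tuple[tuple[int, ...], ...]

# Hypothetical dictionary: marker id -> inner 4x4 code (border not included).
# Real ArUco dictionaries are larger and chosen so that codes stay far apart.
DICTIONARY: dict[int, Grid] = {
    7: ((1, 0, 0, 0),
        (1, 1, 1, 0),
        (0, 0, 1, 1),
        (1, 0, 0, 1)),
}


def rotate(bits: Grid) -> Grid:
    """Rotate a square bit grid 90 degrees clockwise."""
    return tuple(zip(*bits[::-1]))


def with_border(inner: Grid) -> Grid:
    """Surround an inner code with the one-cell black ring every marker has."""
    size = len(inner) + 2
    black = (0,) * size
    return (black,) + tuple((0,) + tuple(row) + (0,) for row in inner) + (black,)


def decode(cells: Grid) -> Optional[tuple[int, int]]:
    """Return (marker_id, rotations) if the sampled grid is a valid marker, else None.

    `rotations` is the number of clockwise quarter turns that aligned the code.
    """
    n = len(cells)
    # 1. Reject the candidate if any cell of the outer ring is white.
    for i in range(n):
        if cells[0][i] or cells[n - 1][i] or cells[i][0] or cells[i][n - 1]:
            return None
    # 2. Keep only the inner cells, which carry the code.
    inner = tuple(tuple(row[1:n - 1]) for row in cells[1:n - 1])
    # 3. Look the code up in the dictionary, trying all four orientations.
    for rotations in range(4):
        for marker_id, code in DICTIONARY.items():
            if inner == code:
                return marker_id, rotations
        inner = rotate(inner)
    return None
```

For example, `decode(with_border(DICTIONARY[7]))` returns `(7, 0)`. Trying the four rotations is also what tells the detector how the marker is oriented in the image.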

Week 4 (February 3rd - February 9th)

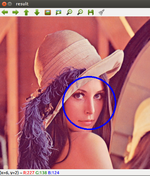

As mentioned, our own solutions are still in progress; we expect to complete them this week. In the meantime, we managed to run our first tests with ArUco. We tried one solution using black and white markers with ArUco, and a second one using face detection with OpenCV. The next step is to test it on video, first to measure its efficiency and then to retrieve the coordinates of the marker's position, which the system group needs.

Week 5 (February 10th - February 16th)

After many attempts, we finally succeeded in detecting several markers in a JPEG picture and in an AVI video. We are also able to retrieve the spatial coordinates of the marker and can write them to a file that the system team will use. Our next step is to detect markers in a live video.
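To give an idea of how a marker's spatial coordinates relate to what the camera sees, here is a simplified pinhole-camera sketch. It is an approximation under stated assumptions, not what ArUco does (ArUco estimates the full pose from the four detected corners and the camera calibration): it assumes the marker roughly faces the camera, that its real side length L is known, and that the focal lengths `fx`, `fy` and principal point `cx`, `cy` come from a prior calibration. The depth is then z = fx * L / l, where l is the apparent side length in pixels, and x, y follow from the marker centre (u, v) in the image.

```python
from dataclasses import dataclass


@dataclass
class Intrinsics:
    """Pinhole camera parameters in pixels, assumed to come from a camera calibration."""
    fx: float  # focal length along x
    fy: float  # focal length along y
    cx: float  # principal point, x
    cy: float  # principal point, y


def marker_position(k: Intrinsics, u: float, v: float,
                    side_px: float, side_m: float) -> tuple[float, float, float]:
    """Approximate (x, y, z) of a marker centre in the camera frame, in metres.

    Assumes the marker is roughly parallel to the image plane, so its apparent
    side length side_px (pixels) and real side length side_m (metres) give the depth.
    """
    z = k.fx * side_m / side_px  # similar triangles: side_px / fx = side_m / z
    x = (u - k.cx) * z / k.fx    # back-project the pixel column
    y = (v - k.cy) * z / k.fy    # back-project the pixel row
    return x, y, z
```

With fx = fy = 800 and (cx, cy) = (320, 240), a 5 cm marker that appears 100 pixels wide and is centred at (400, 240) comes out at roughly (0.04, 0.0, 0.4) metres. Halving its apparent size doubles the estimated distance.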

Even though the ArUco solution now works, we may still run into difficulties, so a plain OpenCV solution remains a fallback option. We have already contacted the Canonball group about it.

Since we can retrieve the x, y, z coordinates of the marker, we plan, following our discussion with the system group, to write these data to a text file that they will use. The advantage of this approach is that if the solution proposed by the system group turns out to be impractical, we can still use this file to demonstrate that our solution works and to look for an alternative.

In the meantime, we have run our first live detection tests. The first results are rather conclusive, but we now have to work out the different scenarios that may occur.
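A possible layout for the text file shared with the system group is sketched below. The format (a comment header, then one line per detection with the frame index, marker id and x, y, z in metres) and the function names `write_positions` and `read_positions` are hypothetical; the actual format is whatever the two groups agree on. Keeping it plain text means the file can also be inspected by hand to show that detection works.

```python
import io
from typing import Iterable, TextIO

# Hypothetical record layout: (frame index, marker id, x, y, z), coordinates in metres.
Record = tuple[int, int, float, float, float]


def write_positions(out: TextIO, records: Iterable[Record]) -> None:
    """Write a header line, then one whitespace-separated line per detected marker."""
    out.write("# frame marker_id x y z\n")
    for frame, marker_id, x, y, z in records:
        out.write(f"{frame} {marker_id} {x:.4f} {y:.4f} {z:.4f}\n")


def read_positions(inp: TextIO) -> list[Record]:
    """Parse the file back (as the system side might), skipping blank and comment lines."""
    records: list[Record] = []
    for line in inp:
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        frame, marker_id, x, y, z = line.split()
        records.append((int(frame), int(marker_id), float(x), float(y), float(z)))
    return records


if __name__ == "__main__":
    # In practice `out` would be open("markers.txt", "w"); StringIO keeps the demo self-contained.
    buf = io.StringIO()
    write_positions(buf, [(0, 7, 0.04, 0.0, 0.4)])
    buf.seek(0)
    print(read_positions(buf))
```

A line-oriented, whitespace-separated format is easy to append to while a video is being processed and easy to parse on the system side without any extra library.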