Proj-2013-2014-Sign2Speech-English: Difference between revisions

[[File:creative.jpeg|300px|right|Map]] |

[[File:creative.jpeg|300px|right|Map]] |

||

| ⚫ | |||

This camera is also a remote controller for the computer. You put it in front of people. |

This camera is also a remote controller for the computer. You put it in front of people. |

||

| Line 47: | Line 36: | ||

This camera films at around 30 fps in 720p. |

This camera films at around 30 fps in 720p. |

||

Intel provides also a SDK for developers : [http://software.intel.com/en-us/vcsource/tools/perceptual-computing-sdk]. Some of the libraries will help |

Intel provides also a SDK for developers : [http://software.intel.com/en-us/vcsource/tools/perceptual-computing-sdk SDK]. Some of the libraries will help for hand and fingers tracking and facial recognition. |

||

== Gallery == |

== Gallery == |

||

| Line 54: | Line 43: | ||

= Work in progress = |

= Work in progress = |

||

We received the subject of the project the 21 of January (2014). This part will resume our progression. |

|||

== Week 1 (January 27 - February 2) == |

== Week 1 (January 27 - February 2) == |

||

| Line 67: | Line 54: | ||

== Week 2 (February 3 - February 9) == |

== Week 2 (February 3 - February 9) == |

||

| ⚫ | |||

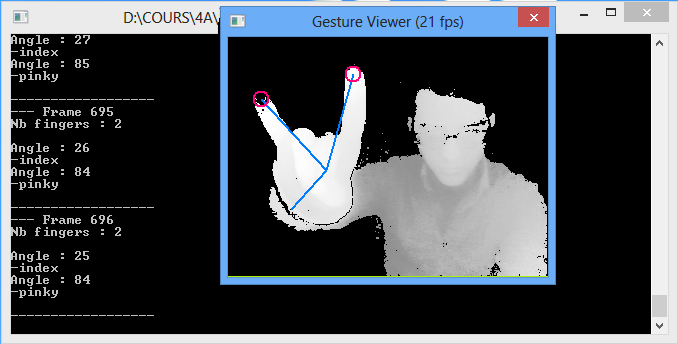

* Implementation of an "overlay" for the SDK to have a better fingers recognition. The goal is to know which finger the user is showing to the camera. |

* Implementation of an "overlay" for the SDK to have a better fingers recognition. The goal is to know which finger the user is showing to the camera. |

||

* Correction of the bug : same finger dectected more than once. |

* Correction of the bug : same finger dectected more than once. |

||

| Line 75: | Line 62: | ||

* Definition of gestures made of symbols in sign language. |

* Definition of gestures made of symbols in sign language. |

||

* Choice of our data structure for symbols and gestures. When the user is doing a symbol with his hands, the program will look into symbols table if it exists. Therefore, the search should be efficient and quick to keep the real-time aspect. Symbols table can have more than hundred symbols. |

* Choice of our data structure for symbols and gestures. When the user is doing a symbol with his hands, the program will look into symbols table if it exists. Therefore, the search should be efficient and quick to keep the real-time aspect. Symbols table can have more than hundred symbols. |

||

| ⚫ | |||

== Week 3 (February 10 - February 16) == |

== Week 3 (February 10 - February 16) == |

||

* Implementation of data structures and dichotomous algorithm (complexity O(log n)) |

* Implementation of data structures and dichotomous algorithm (complexity O(log n)) |

||

* Implementation of others search algorithm. Then we will compare times between all algorithm to choose ours. |

* Implementation of others search algorithm. Then we will compare times between all algorithm to choose ours. |

||

* Search of a |

* Search of a world [http://www.signingsavvy.com/ dictionnary] for sign language. |

||

* "Skype"-like program implementation beginning which will allow a video communication between users on the network. Then, our program will permit yourself to communicate between a mute user and not-mute user. The textual translation will be send to the not-mute user and display on his screen. |

* "Skype"-like program implementation beginning which will allow a video communication between users on the network. Then, our program will permit yourself to communicate between a mute user and not-mute user. The textual translation will be send to the not-mute user and display on his screen. |

||

* First draw of program interface |

* First draw of program interface |

||

| Line 93: | Line 82: | ||

A first problem can be detected : the speed of picture processing. Shape recognition as hand contour is slower than with Intel technology (with depth sensor). |

A first problem can be detected : the speed of picture processing. Shape recognition as hand contour is slower than with Intel technology (with depth sensor). |

||

A second matter can be observed : hand position. If you want your hand be recognized, it must not be over user face. Hands and face have then same skin color so contours are not really detected. |

A second matter can be observed : hand position. If you want your hand be recognized, it must not be over user face. Hands and face have then same skin color so contours are not really detected. |

||

To have a good detection, the hand must be next to the face, in front of another coloured background. Constraints are too heavy to use this technology. We |

To have a good detection, the hand must be next to the face, in front of another coloured background. Constraints are too heavy to use this technology. We stay on Intel technology. |

||

== Week 5 (February 24 - March 2) == |

|||

* Implementation of movements in our gesture recognition algorithm : thanks to mathematical equations, we can now detect rectilinear, elliptical movements or say if the user doesn't move. |

|||

* Symbol recognition "closed fist", that we can find in many gestures. |

|||

* Discussion about : how save the dictionnary in user HDD. We choose an XML file. |

|||

* Implementation of encryption mecanism to prevent the user to see the XML file. Indead, this file shows how our program is functionning. |

|||

== Week 6 (March 3 - March 9) == |

|||

| ⚫ | |||

* Implementation of user interface for our "Skype"-like program. |

|||

* Encryption mecanism available |

|||

* Creation of a program to try to send image data thanks to different methods |

|||

* Writing of a complete report with technical details |

|||

* Adding ''I LOVE YOU'' in the dictionnary |

|||

* Matter to integrate interface with our recognition program did past weeks. A problem between the thread "interface" and thread "motor". |

|||

== Week 7 (March 10 - March 16) == |

|||

* Mid-term oral presentation |

|||

* Integration of Thread Interface with Thread motor. |

|||

== Week 8 (March 10 - March 23) == |

|||

* Adding '''AIRPLANE''' in the dictionnary |

|||

* Adding '''ALL''' in the dictionnary |

|||

* Creation of the dictionnary in XML |

|||

* Integration of XML parser in the program and the gesture tree is built thanks to this dictionnary. |

|||

== Week 9 (March 24 - March 30) == |

|||

* Adding the new feature : '''vocal synthesis''' |

|||

* Adding '''UP''' in the dictionnary |

|||

* Adding '''ACCIDENT''' in the dictionnary |

|||

* Adding '''OBSTINATE''' in the dictionnary |

|||

* Correcting bugs when adding nodes in the movement tree |

|||

* Correcting bugs in Machine learning |

|||

== Week 10 (March 31 - April 06) == |

|||

* Preparation of final oral |

|||

* Flyers creation |

|||

* Writing of developper manual |

|||

Latest revision as of 20:36, 21 October 2014

Objective

The goal of the project is to allow deaf people to communicate over the Internet with people who do not understand sign language. The program should understand sign language well enough to carry out the orders it is given. These orders should then be displayed as text and converted to speech by a speech synthesis engine.

The program should also be able to learn new hand movements, growing its database so that it can recognize more ideas.

Software Requirements Specification

- Tutor: Didier Donsez

- Author: Patrick PEREA

- Department: RICM 4, Polytech Grenoble

State of the Art

Recognition of the sign language alphabet

Recognition of particular hand movements that stand for ideas (ZCam camera)

Recognition of particular hand movements that stand for ideas, with translation into Spanish (OpenCV)

Recognition of particular hand movements that stand for ideas (Kinect)

Tools

We will use a special kind of camera, the Intel-Creative camera, with the Intel® Perceptual Computing SDK.

This camera also acts as a remote control for the computer. It is placed in front of the user.

The Creative camera has a depth sensor, which lets developers distinguish between planes (foreground and background). This camera films at around 30 fps in 720p.

Intel also provides an SDK for developers: http://software.intel.com/en-us/vcsource/tools/perceptual-computing-sdk. Some of its libraries help with hand and finger tracking and facial recognition.

Gallery

Work in progress

Week 1 (January 27 - February 2)

- Discovery of the Intel Creative camera

- Discovery of, and getting used to, the Intel SDK

- Choice of programming language (C++)

- First finger recognition program

- First issue: the Intel SDK is not finished. It provides methods for recognizing fingertips, wrists and hand centers, but it cannot tell the individual fingers apart.

- First bug found with the camera: in a single picture from the depth sensor, the same finger can be detected more than once.

Week 2 (February 3 - February 9)

- Implementation of an "overlay" on top of the SDK to improve finger recognition. The goal is to know which finger the user is showing to the camera.

- Correction of the bug where the same finger was detected more than once.

- Getting in contact with Intel through its developer forum.

- Our camera may have a hardware problem that causes turbulence (noise) in the depth sensor picture. More tests are needed.

- Addition of a hand calibration function (implementation not finished)

- Definition of gestures as sequences of sign language symbols.

- Choice of our data structure for symbols and gestures. When the user makes a symbol with their hands, the program looks it up in the symbol table. The search must therefore be fast enough to keep the program real-time. The symbol table can hold more than a hundred symbols.
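
The duplicate-finger fix mentioned above could be sketched as follows. This is only an illustration under assumptions: the `Point3` type, the `dist3` helper, the function name `mergeDuplicateFingertips` and the `minSeparation` threshold are all hypothetical, and the real overlay works on the Intel SDK's own structures, which are not shown here.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

// Hypothetical 3D point; the Intel SDK uses its own types (assumption).
struct Point3 {
    float x, y, z;
};

// Euclidean distance between two points.
inline float dist3(const Point3& a, const Point3& b) {
    const float dx = a.x - b.x;
    const float dy = a.y - b.y;
    const float dz = a.z - b.z;
    return std::sqrt(dx * dx + dy * dy + dz * dz);
}

// Keeps the first fingertip of every cluster: a candidate is dropped when it
// lies closer than minSeparation to a fingertip that was already kept.
std::vector<Point3> mergeDuplicateFingertips(const std::vector<Point3>& tips,
                                             float minSeparation) {
    assert(minSeparation >= 0.0f);
    std::vector<Point3> kept;
    for (const Point3& candidate : tips) {
        bool duplicate = false;
        for (const Point3& k : kept) {
            if (dist3(candidate, k) < minSeparation) {
                duplicate = true;
                break;
            }
        }
        if (!duplicate) kept.push_back(candidate);
    }
    return kept;
}
```

With at most ten fingertips per frame, the quadratic comparison is cheap; the threshold would have to be tuned against the sensor's noise.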

Week 3 (February 10 - February 16)

- Implementation of the data structures and of a dichotomous (binary) search algorithm (complexity O(log n))

- Implementation of other search algorithms. We will then compare their running times to choose one.

- Search for a worldwide sign language dictionary (http://www.signingsavvy.com/).

- Start of the implementation of a "Skype"-like program that will allow video communication between users over the network. Our program will then let a mute user communicate with a non-mute user: the textual translation will be sent to the non-mute user and displayed on their screen.

- First draft of the program interface
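
As an illustration of the dichotomous search listed above, here is a minimal sketch of a lookup in a symbol table sorted by a numeric code. The `Symbol` structure, its integer `code` field and the function name `findSymbol` are assumptions made for this example; the project's actual encoding of hand shapes is not described here.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <string>
#include <vector>

// Hypothetical table entry: a numeric code for a hand shape plus its name.
struct Symbol {
    std::uint32_t code;
    std::string name;
};

// Dichotomous (binary) search in a table sorted by ascending code.
// Returns a pointer to the matching entry, or nullptr when it is absent.
// Each iteration halves the search interval, hence O(log n) comparisons.
const Symbol* findSymbol(const std::vector<Symbol>& table, std::uint32_t code) {
    assert(std::is_sorted(table.begin(), table.end(),
                          [](const Symbol& a, const Symbol& b) {
                              return a.code < b.code;
                          }));
    std::size_t lo = 0;
    std::size_t hi = table.size();
    while (lo < hi) {
        const std::size_t mid = lo + (hi - lo) / 2;
        if (table[mid].code == code) return &table[mid];
        if (table[mid].code < code) {
            lo = mid + 1;  // target can only be in the upper half
        } else {
            hi = mid;      // target can only be in the lower half
        }
    }
    return nullptr;
}
```

For a table of a few hundred symbols this needs fewer than ten comparisons per lookup, which fits the real-time constraint.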

Week 4 (February 17 - February 23)

- First gesture recognized, with display of its textual translation: "Africa".

- Issue: for a few years now, Microsoft has no longer allowed developers to create Skype plugins. We wanted to integrate our Sign2Speech functionality into Skype, so we are now moving toward developing "stand-alone" software.

- Exploration of OpenCV as a replacement for the Creative camera, because of the turbulence:

Advantages: any camera can be used for hand recognition, and the camera built into a laptop should be enough. Users would therefore not need to buy an Intel Creative camera to use our program.

Disadvantages: the first problem is the speed of picture processing. Shape recognition, such as finding the hand contour, is slower than with the Intel technology and its depth sensor. The second problem is hand position. For the hand to be recognized, it must not be in front of the user's face: hands and face have the same skin color, so the contours are not detected reliably. For good detection, the hand must be beside the face, in front of a background of a different color. These constraints are too heavy for us to use this technology, so we are staying with the Intel technology.

Week 5 (February 24 - March 2)

- Implementation of movements in our gesture recognition algorithm: using mathematical equations, we can now detect rectilinear and elliptical movements, or determine that the user is not moving.

- Recognition of the "closed fist" symbol, which appears in many gestures.

- Discussion of how to save the dictionary on the user's hard drive. We chose an XML file.

- Implementation of an encryption mechanism to prevent the user from reading the XML file. Indeed, this file reveals how our program works.
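
The movement detection mentioned above is not detailed on this page. As a rough sketch of one possible approach, and not the project's actual equations, a trajectory can be classified by comparing the distance between its end points (the chord) with the total length travelled. The `Vec2` type, the `Movement` enum and both thresholds are assumptions; the `Curved` category merely stands in for elliptical movements and does not fit an ellipse.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical 2D hand position sample.
struct Vec2 {
    float x, y;
};

enum class Movement { Still, Rectilinear, Curved };

// Classifies a sampled trajectory:
//  - Still when the total path length is at most stillThreshold;
//  - Rectilinear when chord / path length is at least straightness;
//  - Curved otherwise (a stand-in for elliptical movements).
Movement classifyMovement(const std::vector<Vec2>& path,
                          float stillThreshold,
                          float straightness) {
    assert(stillThreshold >= 0.0f);
    assert(straightness > 0.0f && straightness <= 1.0f);
    if (path.size() < 2) return Movement::Still;

    float length = 0.0f;
    for (std::size_t i = 1; i < path.size(); ++i) {
        length += std::hypot(path[i].x - path[i - 1].x,
                             path[i].y - path[i - 1].y);
    }
    if (length <= stillThreshold) return Movement::Still;

    const float chord = std::hypot(path.back().x - path.front().x,
                                   path.back().y - path.front().y);
    return (chord / length >= straightness) ? Movement::Rectilinear
                                            : Movement::Curved;
}
```

One limitation of this heuristic: a back-and-forth movement along a line has a short chord and would be reported as curved, so a real implementation would need a stricter test, such as a line or ellipse fit.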

Week 6 (March 3 - March 9)

- Implementation of the user interface for our "Skype"-like program.

- Encryption mechanism available

- Creation of a program to try sending image data using different methods

- Writing of a complete report with technical details

- Adding I LOVE YOU to the dictionary

- Difficulty integrating the interface with the recognition program written in the previous weeks: there is a problem between the "interface" thread and the "motor" (engine) thread.

Week 7 (March 10 - March 16)

- Mid-term oral presentation

- Integration of the "interface" thread with the "motor" (engine) thread.

Week 8 (March 17 - March 23)

- Adding AIRPLANE to the dictionary

- Adding ALL to the dictionary

- Creation of the dictionary in XML

- Integration of an XML parser in the program; the gesture tree is now built from this dictionary.
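
To illustrate how a gesture tree can be built from dictionary entries, here is a minimal sketch that stores each gesture as a path of symbol identifiers in a prefix tree. The class names `GestureNode` and `GestureTree`, the use of integer symbol identifiers and the `add`/`lookup` methods are assumptions for this example; the XML parsing step and the project's real node layout are not shown.

```cpp
#include <cassert>
#include <map>
#include <memory>
#include <string>
#include <vector>

// Hypothetical node: children are keyed by the next symbol identifier, and
// word holds the translation when a gesture ends here (empty if none).
struct GestureNode {
    std::map<int, std::unique_ptr<GestureNode>> children;
    std::string word;
};

class GestureTree {
public:
    // Inserts one dictionary entry: a sequence of symbols and its word.
    void add(const std::vector<int>& symbols, const std::string& word) {
        assert(!symbols.empty());
        GestureNode* node = &root_;
        for (int s : symbols) {
            std::unique_ptr<GestureNode>& child = node->children[s];
            if (!child) child.reset(new GestureNode());
            node = child.get();
        }
        node->word = word;
    }

    // Returns the word for an exact symbol sequence, or "" when unknown.
    std::string lookup(const std::vector<int>& symbols) const {
        const GestureNode* node = &root_;
        for (int s : symbols) {
            auto it = node->children.find(s);
            if (it == node->children.end()) return "";
            node = it->second.get();
        }
        return node->word;
    }

private:
    GestureNode root_;
};
```

Gestures that start with the same symbols share the beginning of their path, so recognition can follow the tree one symbol at a time as the user signs.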

Week 9 (March 24 - March 30)

- Adding a new feature: speech synthesis

- Adding UP to the dictionary

- Adding ACCIDENT to the dictionary

- Adding OBSTINATE to the dictionary

- Correcting bugs when adding nodes to the movement tree

- Correcting bugs in the machine learning

Week 10 (March 31 - April 06)

- Preparation of the final oral presentation

- Flyer creation

- Writing of the developer manual