Proj-2012-2013-OAR-Cloud

Edited by Jordan.Calvi

Revision as of 15:28, 18 February 2013

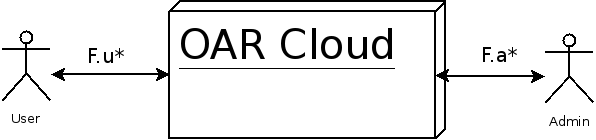

OAR Cloud Project

This project aims at creating a light cloud computing architecture on top of the batch scheduler OAR.

Project Members

This project is proposed by:

Olivier Richard - Teacher and researcher in RICM's Polytech Grenoble training

Three students from RICM are working on it:

- Jordan Calvi (RICM4)

- Alexandre Maurice (RICM4)

- Michael Mercier (RICM5)

Conception

Context

Two kinds of actors deal with OAR Cloud: users and administrators. The F.u.* and F.a.* identifiers denote the user and administrator features described below.

Features

User

Main features:

F.u.0 Connect to an account

F.u.1 Launch and configure one or more instances

F.u.2 Deploy an image on one or more instances

F.u.3 Modify and save images

F.u.4 Setup alarms based on rules using metrics

F.u.5 Be informed by e-mail and/or notification of events of interest

Advanced features:

F.u.6 Automated resize of an instance (adapt the resources) using predefined rules and schedule

F.u.7 Load balancing between several instances

F.u.8 Advanced Network configuration for user: ACL, subnets, VPN...

Administrator

F.a.0 Create/delete user accounts

F.a.1 Add/remove and manage resources

F.a.2 Visualize resource and instance states

F.a.3 Install and update node operating systems

F.a.4 Handle user access rights

F.a.5 Setup alarms based on rules using metrics

F.a.6 Be informed by e-mail and/or notification of events of interest

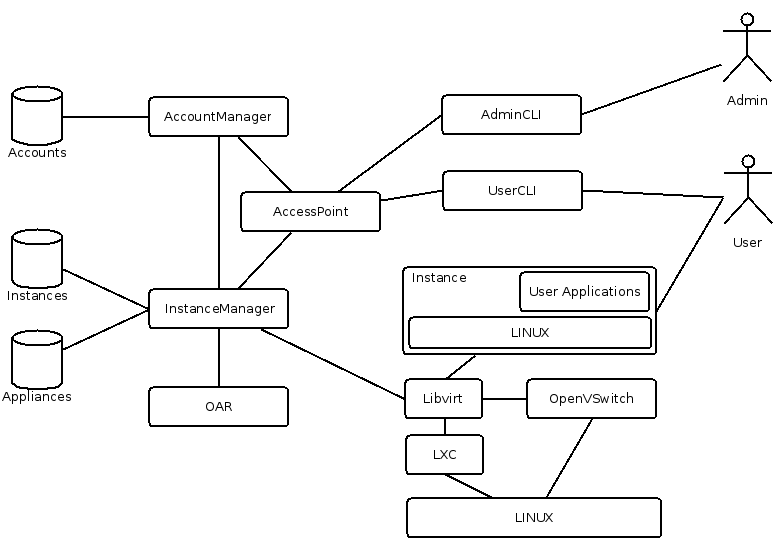

Logical View

Here is the logical view of the OAR Cloud system. Every component on this diagram represents a software component type. The links between these components represent the communication between them.

Description of the main components:

- AccountManager

- Handles user and admin access rights

- AccessPoint

- The system access point reached by the different access tools

- InstanceManager

- Manages the creation, configuration and deletion of instances across the nodes. It also handles appliance persistence and deployment

- UserCLI & AdminCLI

- Command line access tools for users and admins

Architecture

Libvirt and LXC

LXC

LXC is a lightweight operating-system-level virtualization tool (often loosely called a hypervisor) that runs isolated appliances called containers. It provides a virtual environment with its own process and network space, similar to a chroot but with stronger isolation. Because containers share the host's Linux kernel, only operating systems compatible with that kernel can run. LXC relies on cgroups (control groups), a Linux kernel feature that manages resources such as CPU, memory and disk I/O by limiting resources, prioritizing groups, accounting (measuring) and controlling groups. Isolation comes from separate namespaces: processes, network connections and files in one group are not visible to other groups.

Installation

/!\ LXC has been set up successfully on Ubuntu 12.04 LTS, because launching containers does not work on Debian Wheezy (testing). /!\

Packages installation

aptitude install lxc bridge-utils debootstrap

- /?\ Containers will be placed in /var/lib/lxc /?\

Mounting cgroups automatically: edit /etc/fstab and add the following line

cgroup /sys/fs/cgroup cgroup defaults 0 0

Enabling previous modifications

mount -a

Checking everything is ok

lxc-checkconfig
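The /etc/fstab edit above is easy to apply twice by accident. The following is a minimal Python sketch, not part of the original procedure, that adds the cgroup entry only when it is missing. The function names `has_cgroup_mount` and `ensure_cgroup_mount` are hypothetical, and the sketch works on the file content as a string so that it can be tried without touching the real /etc/fstab.

```python
# The fstab entry given in the installation steps above.
CGROUP_ENTRY = "cgroup /sys/fs/cgroup cgroup defaults 0 0"


def has_cgroup_mount(fstab_text):
    """Return True if an active (non-comment) line mounts cgroup on /sys/fs/cgroup."""
    for line in fstab_text.splitlines():
        # Drop anything after '#', then split into whitespace-separated fields.
        fields = line.split("#", 1)[0].split()
        if len(fields) >= 3 and fields[1] == "/sys/fs/cgroup" and fields[2] == "cgroup":
            return True
    return False


def ensure_cgroup_mount(fstab_text):
    """Return fstab_text with the cgroup entry appended if it was missing."""
    if has_cgroup_mount(fstab_text):
        return fstab_text
    if fstab_text and not fstab_text.endswith("\n"):
        fstab_text += "\n"
    return fstab_text + CGROUP_ENTRY + "\n"
```

A caller would read /etc/fstab, pass its content through `ensure_cgroup_mount`, write the result back as root, and then run `mount -a` as described above.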

Manipulation of containers

Creating a container running Ubuntu

lxc-create -t ubuntu -n ubuntu1

- /!\ By default, the version of the guest OS is the same as that of the host. /!\

Showing existing containers and those that are running

lxc-ls

- /?\ The first line lists existing containers and the second one those in the running state. /?\

Obtaining information about ubuntu1

lxc-info -n ubuntu1

Starting the container

lxc-start -n ubuntu1

Connecting to the container

lxc-console -n ubuntu1

Shutting down the container

lxc-stop -n ubuntu1

Exiting console

- press CTRL-a, then q

Deleting the container

lxc-destroy -n ubuntu1
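For scripting, the LXC commands listed in this section can be wrapped in a small helper. This is a sketch under stated assumptions: the function `lxc_command` and the table `LXC_ACTIONS` are hypothetical names, and the helper only builds the argument list without running anything, so it can be inspected safely on a machine without LXC.

```python
# Command prefixes taken from the commands shown in this section.
LXC_ACTIONS = {
    "create": ["lxc-create", "-t", "ubuntu", "-n"],
    "info": ["lxc-info", "-n"],
    "start": ["lxc-start", "-n"],
    "console": ["lxc-console", "-n"],
    "stop": ["lxc-stop", "-n"],
}


def lxc_command(action, name):
    """Build the argument list for one of the LXC commands above."""
    if action not in LXC_ACTIONS:
        raise ValueError("unknown action: %r" % action)
    # Reject names that could be mistaken for an option or split into several arguments.
    if not name or name.startswith("-") or any(c.isspace() for c in name):
        raise ValueError("invalid container name: %r" % name)
    return LXC_ACTIONS[action] + [name]
```

The resulting list can be passed to `subprocess.run(cmd, check=True)` as root; passing a list rather than a shell string avoids quoting issues with container names.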

Configuring the container

At boot time, a virtual machine reads the file /var/lib/lxc/{VM-name}/config to set up its configuration (root file system, number of TTYs, limits, etc.).

lxc.network.type=veth

lxc.network.link=lxcbr0

lxc.network.flags=up

lxc.network.hwaddr = 00:16:3e:24:e5:9a

lxc.utsname = ubuntu1

lxc.devttydir = lxc

lxc.tty = 4

lxc.pts = 1024

lxc.rootfs = /var/lib/lxc/ubuntu1/rootfs

lxc.mount = /var/lib/lxc/ubuntu1/fstab

lxc.arch = amd64

lxc.cap.drop = sys_module mac_admin

lxc.pivotdir = lxc_putold

# uncomment the next line to run the container unconfined:

#lxc.aa_profile = unconfined

lxc.cgroup.devices.deny = a

# Allow any mknod (but not using the node)

lxc.cgroup.devices.allow = c *:* m

lxc.cgroup.devices.allow = b *:* m

# /dev/null and zero

lxc.cgroup.devices.allow = c 1:3 rwm

lxc.cgroup.devices.allow = c 1:5 rwm

# consoles

lxc.cgroup.devices.allow = c 5:1 rwm

lxc.cgroup.devices.allow = c 5:0 rwm

#lxc.cgroup.devices.allow = c 4:0 rwm

#lxc.cgroup.devices.allow = c 4:1 rwm

# /dev/{,u}random

lxc.cgroup.devices.allow = c 1:9 rwm

lxc.cgroup.devices.allow = c 1:8 rwm

lxc.cgroup.devices.allow = c 136:* rwm

lxc.cgroup.devices.allow = c 5:2 rwm

# rtc

lxc.cgroup.devices.allow = c 254:0 rwm

#fuse

lxc.cgroup.devices.allow = c 10:229 rwm

#tun

lxc.cgroup.devices.allow = c 10:200 rwm

#full

lxc.cgroup.devices.allow = c 1:7 rwm

#hpet

lxc.cgroup.devices.allow = c 10:228 rwm

#kvm

lxc.cgroup.devices.allow = c 10:232 rwm
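The configuration above uses a simple `key = value` syntax in which `#` starts a comment and a key such as `lxc.cgroup.devices.allow` may appear several times. As an illustration only, the following Python sketch reads such a file into a dictionary of lists. The function name `parse_lxc_config` is hypothetical, and the parser covers only the syntax visible in the sample above, not every feature of the LXC configuration format.

```python
from collections import defaultdict


def parse_lxc_config(text):
    """Map each key to the list of its values, in file order."""
    settings = defaultdict(list)
    for raw in text.splitlines():
        line = raw.strip()
        # Skip blank lines and comments (including commented-out settings).
        if not line or line.startswith("#"):
            continue
        key, sep, value = line.partition("=")
        if not sep:
            continue  # not a key = value line
        settings[key.strip()].append(value.strip())
    return dict(settings)


# A short excerpt of the configuration shown above.
SAMPLE = """\
lxc.network.type=veth
lxc.utsname = ubuntu1
#lxc.aa_profile = unconfined
lxc.cgroup.devices.deny = a
# /dev/null and zero
lxc.cgroup.devices.allow = c 1:3 rwm
lxc.cgroup.devices.allow = c 1:5 rwm
"""
```

Keeping a list per key preserves the repeated `lxc.cgroup.devices.allow` rules, which a plain dictionary would silently overwrite.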

Configuring default network and switch

- /etc/default/lxc

Libvirt

Installation

Packages installation

apt-get install libvirt-bin

Creating an XML file configuration to import an existing container in libvirt

- /!\ Note that libvirt cannot install an OS in a container. Therefore, an LXC container with an OS must have been set up beforehand (as described above). The directory holding its file system is then given to libvirt when importing the VM. /!\

- To create a libvirt container, an XML file describing the VM to import must be written. Here is a sample XML file for the VM "ubuntu1" created above:

<domain type='lxc'>

<name>ubuntu1</name>

<memory>332768</memory>

<os>

<type>exe</type>

<init>/sbin/init</init>

</os>

<vcpu>1</vcpu>

<clock offset='utc'/>

<on_poweroff>destroy</on_poweroff>

<on_reboot>restart</on_reboot>

<on_crash>destroy</on_crash>

<devices>

<emulator>/usr/lib/libvirt/libvirt_lxc</emulator>

<filesystem type='mount'>

<source dir='/var/lib/lxc/ubuntu1/rootfs'/>

<target dir='/'/>

</filesystem>

<interface type='network'>

<source network='default'/>

</interface>

<console type='pty' />

</devices>

</domain>
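When several containers have to be imported, writing this file by hand for each one becomes tedious. The sketch below generates the same structure with Python's standard `xml.etree.ElementTree` module. The function name `build_lxc_domain` and its parameters are hypothetical. The element names and fixed values are copied from the sample above, and the memory value is passed through unchanged (libvirt reads `<memory>` in KiB when no unit is given).

```python
import xml.etree.ElementTree as ET


def build_lxc_domain(name, rootfs, memory_kib, vcpus=1, network="default"):
    """Return a libvirt LXC domain definition mirroring the sample above."""
    domain = ET.Element("domain", type="lxc")
    ET.SubElement(domain, "name").text = name
    ET.SubElement(domain, "memory").text = str(memory_kib)

    os_el = ET.SubElement(domain, "os")
    ET.SubElement(os_el, "type").text = "exe"
    ET.SubElement(os_el, "init").text = "/sbin/init"

    ET.SubElement(domain, "vcpu").text = str(vcpus)
    ET.SubElement(domain, "clock", offset="utc")
    for event, action in (("on_poweroff", "destroy"),
                          ("on_reboot", "restart"),
                          ("on_crash", "destroy")):
        ET.SubElement(domain, event).text = action

    devices = ET.SubElement(domain, "devices")
    ET.SubElement(devices, "emulator").text = "/usr/lib/libvirt/libvirt_lxc"
    # The container's root file system is the rootfs directory created by LXC.
    fs = ET.SubElement(devices, "filesystem", type="mount")
    ET.SubElement(fs, "source", dir=rootfs)
    ET.SubElement(fs, "target", dir="/")
    iface = ET.SubElement(devices, "interface", type="network")
    ET.SubElement(iface, "source", network=network)
    ET.SubElement(devices, "console", type="pty")

    return ET.tostring(domain, encoding="unicode")
```

For example, `build_lxc_domain("ubuntu1", "/var/lib/lxc/ubuntu1/rootfs", 332768)` returns a string that can be saved as ubuntu1.xml. Building the tree programmatically also guarantees well-formed output, which avoids mistakes such as an unclosed opening tag.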

Setting the container as a libvirt one

virsh --connect lxc:/// define ubuntu1.xml

Booting the container

virsh -c lxc:/// start ubuntu1

Connecting to the container locally

virsh -c lxc:/// console ubuntu1

Connecting to the container remotely

virsh -c lxc+{IPDEST}:/// console ubuntu1

Shutting down the container

virsh -c lxc:/// destroy ubuntu1

Deleting the container

virsh -c lxc:/// undefine ubuntu1

Problems

- With Ubuntu as the host, when connecting to a libvirt VM running Debian, the guest appliance waits for the user to log in on two interfaces at once (tty1 and console), so it is not possible to log in.

- With Debian Wheezy as the host, when connecting to a libvirt VM, the console does not offer a login prompt. However, there is no such issue when using LXC directly.

Open vSwitch

TODO list

- Explore Amazon Elastic Compute Cloud API

- Understand technologies:

- OAR

- LXC

- Libvirt

- OpenVSwitch

- Find out how to handle dynamic jobs

Journal

04/02

- We specified the subject

- We distributed the work among us:

- Jordan: LXC and Libvirt

- Alexandre: OpenVSwitch and Libvirt

- Michael: OAR and global architecture