RobAIR-ICSOC12

No edit summary |

No edit summary |

||

| (42 intermediate revisions by the same user not shown) | |||

| Line 1: | Line 1: | ||

[[Image:wifibot-configuration.jpg|200px|thumb|right|WifiBot configuration]] |

|||

| ⚫ | |||

| ⚫ | |||

| ⚫ | |||

[[Image:wifibot-with-geiger-gas.jpg|200px|thumb|right|WifiBot with geiger counters and toxic gas sensors]] |

[[Image:wifibot-with-geiger-gas.jpg|200px|thumb|right|WifiBot with geiger counters and toxic gas sensors]] |

||

[[Image:Wifibot_hokuyo_lidar.jpg|200px|thumb|right|WifiBot's Lidar]] |

[[Image:Wifibot_hokuyo_lidar.jpg|200px|thumb|right|WifiBot's Lidar]] |

||

[[Image:Wifibot_spherewebcam.jpg|200px|thumb|right|WifiBot's PTZ Webcam]] |

|||

[[Image:sparkfungeiger.jpg|200px|thumb|right|Onboard USB geiger counter]] |

|||

[[Image:UltrasonicScanner.jpg|200px|thumb|right|Three HC-SR04 Ultrasonic Sensors on a tilt bracket]] |

|||

[[Image:upnp.robair.png|200px|thumb|right|RobAIR UPnP Device]] |

|||

[[Image:Wifibot-lidar.png|200px|thumb|right|WifiBot's Lidar Pilot Widget]] |

[[Image:Wifibot-lidar.png|200px|thumb|right|WifiBot's Lidar Pilot Widget]] |

||

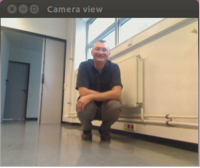

[[Image:Wifibot-cam.png|200px|thumb|right|WifiBot's Camera Pilot Widget]] |

[[Image:Wifibot-cam.png|200px|thumb|right|WifiBot's Camera Pilot Widget]] |

||

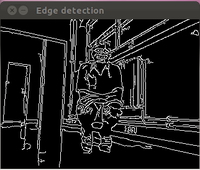

[[Image:Wifibot-edge.png|200px|thumb|right|WifiBot's Edge Detection Pilot Widget]] |

[[Image:Wifibot-edge.png|200px|thumb|right|WifiBot's Edge Detection Pilot Widget]] |

||

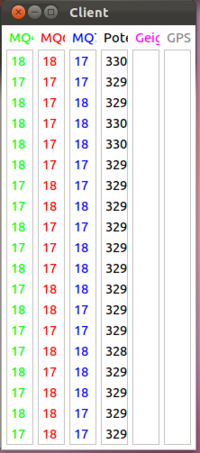

[[Image:Wifibot-sensors.png|200px|thumb|right|WifiBot's Sensors Pilot Widget]] |

[[Image:Wifibot-sensors.png|200px|thumb|right|WifiBot's Sensors Pilot Widget]] |

||

| ⚫ | |||

| ⚫ | |||

| ⚫ | |||

[[Media:FlyerICSOC12A4.pdf]] |

|||

[[Media:PosterICSOC12A3.pdf]] |

|||

==Summary== |

==Summary== |

||

| Line 27: | Line 37: | ||

==Hardware== |

==Hardware== |

||

===Robot=== |

===Robot=== |

||

[[RobAIR]] is based on the [[Wifibot]] robot. |

[[RobAIR]] (Robot for Ambient Intelligent Room) is based on the [[Wifibot]] robot. |

||

The Wifibot had a chassis with 4 wheels geared by 4 DC motors with hall encoders. The Wifibot is controlled by an Atom D510 main board running Linux ( |

The Wifibot had a chassis with 4 wheels geared by 4 DC motors with hall encoders. The Wifibot is controlled by an Atom D510 main board running under Linux (Kubuntu 10.04) or under Windows CE. |

||

Wifibot' nuiltin sensors are |

Wifibot' nuiltin sensors are |

||

* PTZ webcam[http://www.logitech.com/en-us/38/3480 Logitech QuickCam® Orbit AF] ou [[Logitech Quickcam Sphere AF]] |

* PTZ webcam[http://www.logitech.com/en-us/38/3480 Logitech QuickCam® Orbit AF] ou [[Logitech Quickcam Sphere AF]] |

||

* Hokuyo [[Lidar]] ([http://www.hokuyo-aut.jp/02sensor/07scanner/urg_04lx_ug01.html URG-04LX-UG01]) |

* Hokuyo [[Lidar]] ([http://www.hokuyo-aut.jp/02sensor/07scanner/urg_04lx_ug01.html URG-04LX-UG01] [http://www.hokuyo-aut.jp/02sensor/07scanner/download/products/urg-04lx-ug01/data/URG_SCIP20.pdf communication protocol spec.]) |

||

* Infrared sensors (left and right) |

* Infrared sensors (left and right) |

||

* Battery level sensors |

* Battery level sensors |

||

| Line 39: | Line 49: | ||

For the demonstration, we have plugged several additionnal sensors on the available USB ports (''remark: USB hubs are not correctly managed by the current OS distribution''). Several analog and digital sensors are soldered on an Arduino shield piggybacked on an [[Arduino]] UNO board. |

For the demonstration, we have plugged several additionnal sensors on the available USB ports (''remark: USB hubs are not correctly managed by the current OS distribution''). Several analog and digital sensors are soldered on an Arduino shield piggybacked on an [[Arduino]] UNO board. |

||

* [[Geiger counter]] Sensor |

* [[Geiger counter]] Sensor |

||

* |

* Arduino board with: |

||

** LPG gas sensor ([https://www.sparkfun.com/products/9405 MQ-6]) |

|||

| ⚫ | |||

** Methane gas sensor ([https://www.sparkfun.com/products/9404 MQ-4]) |

|||

| ⚫ | |||

** Carbon monoxyde gas sensor ([https://www.sparkfun.com/products/9403 MQ-7]) |

|||

| ⚫ | |||

| ⚫ | |||

| ⚫ | |||

| ⚫ | |||

| ⚫ | |||

Extra sensors are |

Extra sensors are |

||

* [[XBox Kinect]] for depth images and webcam |

* [[XBox Kinect]] for depth images and webcam |

||

* [[139741 Arduino Infrared Obstacle Avoidance Detection Photoelectric Sensor]] for stair/hall detection (Arduino board) |

* [[139741 Arduino Infrared Obstacle Avoidance Detection Photoelectric Sensor]] for stair/hall detection (Arduino board) |

||

| ⚫ | |||

Extra actuators are |

Extra actuators are |

||

* [[135038 Arduino Flame Detection Sensor Module]] (Arduino board) |

|||

* [http://www.seeedstudio.com/depot/scream-out-loud-110dba-fixed-tone-siren-p-301.html?cPath=156_159 scream out loud - 110dBA fixed tone Siren] (controlled by a relay on the Arduino board) |

* [http://www.seeedstudio.com/depot/scream-out-loud-110dba-fixed-tone-siren-p-301.html?cPath=156_159 scream out loud - 110dBA fixed tone Siren] (controlled by a relay on the Arduino board) |

||

===Pilot's Console=== |

===Pilot's Console=== |

||

* PC with USB game controller ou Nunchuck+[[Arduino]] UNO |

* PC with USB game controller ou [[Nunchuck]]+[[Arduino]] UNO |

||

* Android phone or tablet |

* Android phone or tablet |

||

==Software== |

==Software== |

||

===Component architecture=== |

===Component architecture=== |

||

The robot's embedded software and the pilot's console are developed using 2 SCA composites. Inner components are developped in Python and in Java/OSGi. Components and Composites are bound using four types of SCA bindings : direct reference, local socket, UPnP, XMPP/Jingle. |

The robot's embedded software and the pilot's console are developed using 2 [http://www.oasis-opencsa.org/sca/ SCA] composites. Inner components are developped in Python and in Java/OSGi. Components and Composites are bound using four types of SCA bindings : direct reference, local socket, UPnP, XMPP/Jingle (and SIP/Simple but not in this demonstration). |

||

The SCA containers are : NaSCAr/iPOJO for Java/OSGi and [http://ipopo.coderxpress.net iPOPO/Pelix] for Python. |

The SCA containers are : NaSCAr/iPOJO for Java/OSGi and [http://ipopo.coderxpress.net iPOPO/Pelix] for Python. iPOPO components wraps C/C++ native libraries ([[Robot Operating System|ROS]]) and scripts ([[Urbi|Urbiscript]]). |

||

===Services and API=== |

===Services and API=== |

||

====XMPP/JINGLE==== |

|||

* [[File:upnp.robot.device.xml|UPnP Robot DCP]] |

|||

We have modified a GTalk-compliant user-agent ([[JISI]]) according to the robot and the pilot requirements. |

|||

** [[File:upnp.chassis.service.xml|UPnP Chassis Service]] |

|||

====UPnP Profiles==== |

|||

** [[File:upnp.sensor.service.xml|UPnP Sensor Service]] |

|||

The Robot publishes 1 UPnP device profile and 6 UPnP service types. |

|||

** [[File:upnp.xmpp.service.xml|UPnP XMPP Service]] |

|||

* Robot1.xml ([[UPnP-Robair-Robot|file]]) |

|||

** Chassis1.xml ([[UPnP-Robair-Chassis1|file]]) for driving the chassis |

|||

** Sensor1.xml ([[UPnP-Robair-Sensor1|file]]) for analog sensors |

|||

** CompositeSensor1.xml ([[UPnP-Robair-CompositeSensor1|file]]) for multiple value analog sensors |

|||

** DistanceSensor1.xml([[UPnP-Robair-DistanceSensor1|file]]) for Lidar, IR and Ultrasonic distance sensors |

|||

** PositionSensor1.xml ([[UPnP-Robair-PositionSensor1|file]]) for GPS |

|||

** IM1.xml ([[UPnP-Robair-IM1|file]]) for Instant Message and Visioconferencing |

|||

''Remark: The Robot1.xml device profile embeds optionally a device with the standardized [http://upnp.org/specs/ha/digitalsecuritycamera/ Digital Security Camera Profile (DCP)] for each on-board webcam (or Kinect).'' |

|||

====SCA Binding==== |

|||

OASIS had already specified SCA bindings for main stream protocols used in B2B and EAI. |

|||

Our goal is to defined SCA bindings for protocols widely accepted in SOHO (Small Office Home Office) networks (ie UPnP) and in videoconferencing (ie XMPP/Jingle and SIP/SIMPLE). |

|||

=====UPnP SCA Binding===== |

|||

The UPnP SCA Binding implements 1 UPnP device profile and 6 UPnP service types. |

|||

=====IM Binding===== |

|||

The IM SCA Binding implements the session establishment for XMPP/Jingle. |

|||

==Video== |

==Video== |

||

| Line 77: | Line 104: | ||

==References== |

==References== |

||

* Calmant, T., Américo, J.C., Gattaz, O., Donsez, D., and Gama, K.: A dynamic and service-oriented component model for Python long-lived applications. In Proceedings of the 15th ACM SIGSOFT Symposium on Component-Based Software Engineering (2012) pp. 35–40. |

* Calmant, T., Américo, J.C., Gattaz, O., Donsez, D., and Gama, K.: A dynamic and service-oriented component model for Python long-lived applications. In Proceedings of the 15th ACM SIGSOFT Symposium on Component-Based Software Engineering (2012) pp. 35–40. website http://ipopo.coderxpress.net/ |

||

* Américo, J.C., and Donsez, D.: Service Component Architecture Extensions for Dynamic Systems. Accepted for the 10th Int’l Conference on Service-Oriented Computing (2012) |

* Américo, J.C., and Donsez, D.: Service Component Architecture Extensions for Dynamic Systems. Accepted for the 10th Int’l Conference on Service-Oriented Computing (2012) |

||

* Source repository : https://github.com/tcalmant/robair |

|||

==Gallery== |

|||

[[Image:icsoc2012-demo-a.jpg|300px|Joao demonstrating RobAIR @ ICSOC 2012]] [[Image:icsoc2012-demo-b.jpg|300px|Joao demonstrating RobAIR @ ICSOC 2012]] |

|||

Latest revision as of 10:43, 3 December 2012

A Dynamic SCA-based System for Smart Homes and Offices

Authors: Thomas Calmant, Joao Claudio Américo, Didier Donsez and Olivier Gattaz

DEMO PROPOSAL FOR ICSOC 2012 http://www.icsoc.org

Media:FlyerICSOC12A4.pdf Media:PosterICSOC12A3.pdf

Summary

We demonstrate the interoperability and dynamism capabilities of SCA-based systems in the context of robotics for smart habitats. These capabilities rely on two tools that we developed: a Python-based OSGi runtime and service-oriented component model (Pelix and iPOPO, respectively) and a tool to publish SCA services as OSGi services (NaSCAr). Using them, we have developed a robot service and a pilot's user agent, which can dynamically add and remove sensors and widgets. This use case responds to the ubiquitous computing trend and to the runtime adaptivity that such systems need.

Keywords: SCA, Service-Oriented Architectures, Component-Based Design, Dynamic Adaptation, Smart Habitats, Service Robotics

Hardware

Robot

RobAIR (Robot for Ambient Intelligent Room) is based on the Wifibot robot. The Wifibot has a chassis with 4 wheels driven by 4 DC motors with Hall encoders. It is controlled by an Atom D510 main board running Linux (Kubuntu 10.04) or Windows CE.

The Wifibot's built-in sensors are:

- PTZ webcam: Logitech QuickCam® Orbit AF or Logitech Quickcam Sphere AF

- Hokuyo Lidar (URG-04LX-UG01, communication protocol spec.)

- Infrared sensors (left and right)

- Battery level sensors

- Odometer

For the demonstration, we have plugged several additional sensors into the available USB ports (remark: USB hubs are not correctly managed by the current OS distribution). Several analog and digital sensors are soldered onto an Arduino shield piggybacked on an Arduino UNO board.

- Geiger counter Sensor

- Arduino board with:

- LPG gas sensor (MQ-6)

- Methane gas sensor (MQ-4)

- Carbon monoxide gas sensor (MQ-7)

- Flame Detection Sensor

- Ultrasonic Distance Sensor

- Wii Motion Plus I2C Gyroscope

- Inforad K1 NMEA 0183 GPS Receiver
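The GPS receiver above speaks NMEA 0183, so its output is a stream of comma-separated ASCII sentences protected by an XOR checksum. As a minimal sketch of how such a sentence could be decoded (this is not the RobAIR code; the function names `nmea_checksum` and `parse_gga` are hypothetical, and only the GGA sentence type is handled):

```python
from typing import Optional


def nmea_checksum(body: str) -> str:
    """XOR of every character between '$' and '*', as two uppercase hex digits."""
    value = 0
    for ch in body:
        value ^= ord(ch)
    return f"{value:02X}"


def _to_degrees(value: str, hemisphere: str) -> float:
    """Convert the NMEA (d)ddmm.mmmm format to signed decimal degrees."""
    raw = float(value)
    degrees = int(raw // 100)
    minutes = raw - degrees * 100
    result = degrees + minutes / 60.0
    return -result if hemisphere in ("S", "W") else result


def parse_gga(sentence: str) -> Optional[dict]:
    """Return the fix carried by a GGA sentence, or None if it is invalid or has no fix."""
    sentence = sentence.strip()
    if not sentence.startswith("$") or "*" not in sentence:
        return None
    body, _, checksum = sentence[1:].partition("*")
    if nmea_checksum(body) != checksum.upper():
        return None  # corrupted sentence
    fields = body.split(",")
    if len(fields) < 10 or not fields[0].endswith("GGA"):
        return None
    if fields[6] in ("", "0"):
        return None  # fix quality 0 means "no fix"
    return {
        "latitude": _to_degrees(fields[2], fields[3]),
        "longitude": _to_degrees(fields[4], fields[5]),
        "satellites": int(fields[7]),
        "altitude_m": float(fields[9]),
    }
```

A position sensor component could call `parse_gga` on each line read from the receiver's serial port and publish only the sentences that return a fix.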

Extra sensors are

- XBox Kinect for depth images and webcam

- 139741 Arduino Infrared Obstacle Avoidance Detection Photoelectric Sensor for stair/hall detection (Arduino board)

Extra actuators are

- scream out loud - 110dBA fixed tone Siren (controlled by a relay on the Arduino board)

Pilot's Console

- PC with USB game controller or Nunchuck + Arduino UNO

- Android phone or tablet

Software

Component architecture

The robot's embedded software and the pilot's console are developed as 2 SCA composites. Inner components are developed in Python and in Java/OSGi. Components and composites are bound using four types of SCA bindings: direct reference, local socket, UPnP, and XMPP/Jingle (SIP/SIMPLE is also supported, but not used in this demonstration). The SCA containers are NaSCAr/iPOJO for Java/OSGi and iPOPO/Pelix for Python. iPOPO components wrap C/C++ native libraries (ROS) and scripts (Urbiscript).
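To illustrate the dynamism that these containers provide, the sketch below shows the general idea of a service registry whose consumers are notified when sensor services appear and disappear. It is a standard-library illustration only: it does not use the iPOPO/Pelix or NaSCAr APIs, and the names `ServiceRegistry`, `SensorPanel` and `FakeSensor` are hypothetical.

```python
from typing import Callable, Dict, List

Listener = Callable[[str, str, object], None]


class ServiceRegistry:
    """Tiny in-process registry that notifies listeners of service arrival and departure."""

    def __init__(self) -> None:
        self._services: Dict[str, object] = {}
        self._listeners: List[Listener] = []

    def add_listener(self, listener: Listener) -> None:
        self._listeners.append(listener)

    def register(self, name: str, service: object) -> None:
        self._services[name] = service
        self._notify("REGISTERED", name, service)

    def unregister(self, name: str) -> None:
        service = self._services.pop(name)
        self._notify("UNREGISTERING", name, service)

    def _notify(self, event: str, name: str, service: object) -> None:
        for listener in list(self._listeners):
            listener(event, name, service)


class SensorPanel:
    """Stand-in for the pilot's console: keeps one widget entry per available sensor."""

    def __init__(self, registry: ServiceRegistry) -> None:
        self.widgets: Dict[str, object] = {}
        registry.add_listener(self._on_event)

    def _on_event(self, event: str, name: str, service: object) -> None:
        if event == "REGISTERED":
            self.widgets[name] = service  # a sensor was plugged in
        else:
            self.widgets.pop(name, None)  # a sensor went away

    def read_all(self) -> Dict[str, float]:
        return {name: sensor.read() for name, sensor in self.widgets.items()}


class FakeSensor:
    """Hypothetical sensor that always returns the same value."""

    def __init__(self, value: float) -> None:
        self.value = value

    def read(self) -> float:
        return self.value
```

Registering a `FakeSensor` under a name such as `"geiger"` makes it show up in `SensorPanel.read_all()`, and unregistering it removes it again without restarting the panel.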

Services and API

XMPP/JINGLE

We have modified a GTalk-compliant user agent (JISI) to meet the requirements of the robot and the pilot.

UPnP Profiles

The Robot publishes 1 UPnP device profile and 6 UPnP service types.

- Robot1.xml (file)

- Chassis1.xml (file) for driving the chassis

- Sensor1.xml (file) for analog sensors

- CompositeSensor1.xml (file) for multiple value analog sensors

- DistanceSensor1.xml (file) for Lidar, IR and Ultrasonic distance sensors

- PositionSensor1.xml (file) for GPS

- IM1.xml (file) for Instant Messaging and Videoconferencing

Remark: The Robot1.xml device profile optionally embeds a device with the standardized Digital Security Camera Profile (DCP) for each on-board webcam (or Kinect).
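As a rough sketch of what a device description listing these six services could look like, the code below builds a UPnP device description document with the Python standard library. The element names follow the UPnP Device Architecture 1.0 description schema; the vendor domain `example-org`, the type URNs, the URLs and the function name `build_device_description` are assumptions made for illustration and are not taken from the actual Robot1.xml file.

```python
import xml.etree.ElementTree as ET

UPNP_NS = "urn:schemas-upnp-org:device-1-0"
VENDOR = "example-org"  # hypothetical vendor domain, not the one used by RobAIR

# The six service types named in the list above.
SERVICE_NAMES = [
    "Chassis", "Sensor", "CompositeSensor",
    "DistanceSensor", "PositionSensor", "IM",
]


def _q(tag: str) -> str:
    """Qualify a tag name with the UPnP device namespace."""
    return f"{{{UPNP_NS}}}{tag}"


def build_device_description(friendly_name: str, udn: str) -> str:
    """Return a UPnP device description (as a string) exposing one service per type."""
    ET.register_namespace("", UPNP_NS)
    root = ET.Element(_q("root"))
    spec = ET.SubElement(root, _q("specVersion"))
    ET.SubElement(spec, _q("major")).text = "1"
    ET.SubElement(spec, _q("minor")).text = "0"

    device = ET.SubElement(root, _q("device"))
    ET.SubElement(device, _q("deviceType")).text = f"urn:{VENDOR}:device:Robot:1"
    ET.SubElement(device, _q("friendlyName")).text = friendly_name
    ET.SubElement(device, _q("UDN")).text = udn

    service_list = ET.SubElement(device, _q("serviceList"))
    for name in SERVICE_NAMES:
        service = ET.SubElement(service_list, _q("service"))
        ET.SubElement(service, _q("serviceType")).text = f"urn:{VENDOR}:service:{name}:1"
        ET.SubElement(service, _q("serviceId")).text = f"urn:{VENDOR}:serviceId:{name}1"
        ET.SubElement(service, _q("SCPDURL")).text = f"/{name}1.xml"
        ET.SubElement(service, _q("controlURL")).text = f"/control/{name}1"
        ET.SubElement(service, _q("eventSubURL")).text = f"/event/{name}1"
    return ET.tostring(root, encoding="unicode")
```

Each `SCPDURL` points at the per-service description file (for example `/Chassis1.xml`), which is where the actions and state variables of that service type would be declared.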

SCA Binding

OASIS has already specified SCA bindings for the mainstream protocols used in B2B and EAI. Our goal is to define SCA bindings for protocols widely accepted in SOHO (Small Office Home Office) networks (i.e. UPnP) and in videoconferencing (i.e. XMPP/Jingle and SIP/SIMPLE).

UPnP SCA Binding

The UPnP SCA Binding implements 1 UPnP device profile and 6 UPnP service types.

IM Binding

The IM SCA Binding implements the session establishment for XMPP/Jingle.

Video

COMING SOON

Acknowledgement

- Olivier Aycard

References

- Calmant, T., Américo, J.C., Gattaz, O., Donsez, D., and Gama, K.: A dynamic and service-oriented component model for Python long-lived applications. In Proceedings of the 15th ACM SIGSOFT Symposium on Component-Based Software Engineering (2012) pp. 35–40. Website: http://ipopo.coderxpress.net/

- Américo, J.C., and Donsez, D.: Service Component Architecture Extensions for Dynamic Systems. Accepted for the 10th Int’l Conference on Service-Oriented Computing (2012)

- Source repository: https://github.com/tcalmant/robair